The Ollama Web UI project was renamed!

I had a look at it back when it was still called Ollama Web UI, and now it's called Open WebUI.

- The Ollama web UI Official Site

- The Ollama web UI source code on GitHub

- License: MIT ❤️

Have a look at the docs for further configuration.

Generally, we just need something simple, like:

```bash
docker run -d -p 3000:8080 -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
```
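Since the container starts in the background (`-d`), it can take a few seconds until the UI answers on port 3000. Here is a small sketch to wait for it, assuming `curl` is installed on the host. The `wait_for_http` helper is a name I made up for this example, not part of Open WebUI:

```bash
# wait_for_http URL [ATTEMPTS]
# Hypothetical helper: polls URL once per second until it answers with a
# non-error HTTP status, giving up after ATTEMPTS tries (default 60).
wait_for_http() {
  local url="$1" attempts="${2:-60}" i
  for ((i = 1; i <= attempts; i++)); do
    # -f: fail on HTTP status >= 400, -s: no progress meter or error output,
    # -o /dev/null: discard the page body, only the exit status matters
    if curl -fs -o /dev/null "$url"; then
      echo "up: $url"
      return 0
    fi
    sleep 1
  done
  echo "gave up waiting for $url" >&2
  return 1
}
```

Run it right after the `docker run` command, e.g. `wait_for_http http://localhost:3000 120`, and open the browser once it prints `up:`.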

There are additional tweaks available via environment variables, for example:

```bash
# Point Open WebUI at an Ollama server that runs elsewhere
docker run -d -p 3000:8080 -e OLLAMA_BASE_URL=https://example.com -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

# Or: disable the login (WEBUI_AUTH=False)
docker run -d -p 3000:8080 -e WEBUI_AUTH=False -v open-webui:/app/backend/data --name open-webui ghcr.io/open-webui/open-webui:main
```
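If you end up combining several of these variables, it might be tidier to keep them in a file and pass it with Docker's `--env-file` flag. A minimal sketch, where the `write_webui_env` helper, the `open-webui.env` file name and the True/False check are my own assumptions:

```bash
# write_webui_env FILE BASE_URL AUTH
# Hypothetical helper: writes the two Open WebUI settings into FILE,
# one KEY=value per line (the plain format that --env-file reads).
write_webui_env() {
  local file="$1" base_url="$2" auth="$3"
  # Only accept the spelling used in this post, to catch typos early
  case "$auth" in
    True|False) ;;
    *) echo "AUTH must be True or False, got: $auth" >&2; return 1 ;;
  esac
  printf 'OLLAMA_BASE_URL=%s\nWEBUI_AUTH=%s\n' "$base_url" "$auth" > "$file"
}
```

For example `write_webui_env open-webui.env https://example.com False`, followed by `docker run -d -p 3000:8080 --env-file open-webui.env -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main`.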

If you don't have Ollama running as a container yet, this docker-compose.yml makes things easier, as it runs Ollama and Open WebUI together in one stack:

```yml
#version: '3'
services:
  ollama:
    image: ollama/ollama
    container_name: ollama
    ports:
      - "11434:11434"
    volumes:
      - ollama_data:/root/.ollama

  ollama-webui:
    image: ghcr.io/open-webui/open-webui:main
    container_name: ollama-webui
    ports:
      - "3000:8080" # 3000 is the port that you will access in your browser
    # extra_hosts:
    #   - "host.docker.internal:host-gateway"
    volumes:
      - ollama-webui_data:/app/backend/data
    restart: always

volumes:
  ollama_data:
  ollama-webui_data:
```
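To bring the stack up, run `docker compose up -d` in the folder with the docker-compose.yml. Depending on your setup, the compose command might be the newer `docker compose` plugin, the older standalone `docker-compose`, or `podman-compose` if you use Podman. A small sketch that picks whichever one is installed (the `compose_cmd` name is my own):

```bash
# compose_cmd
# Hypothetical helper: prints the first compose CLI found on this machine,
# or fails if none is installed.
compose_cmd() {
  if command -v docker >/dev/null 2>&1 && docker compose version >/dev/null 2>&1; then
    echo "docker compose"   # Compose v2 plugin
  elif command -v docker-compose >/dev/null 2>&1; then
    echo "docker-compose"   # standalone binary
  elif command -v podman-compose >/dev/null 2>&1; then
    echo "podman-compose"
  else
    echo "no compose CLI found" >&2
    return 1
  fi
}
```

Then `$(compose_cmd) up -d` starts both containers and `$(compose_cmd) ps` lists them. The substitution is left unquoted on purpose, so that `docker compose` splits into two words.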

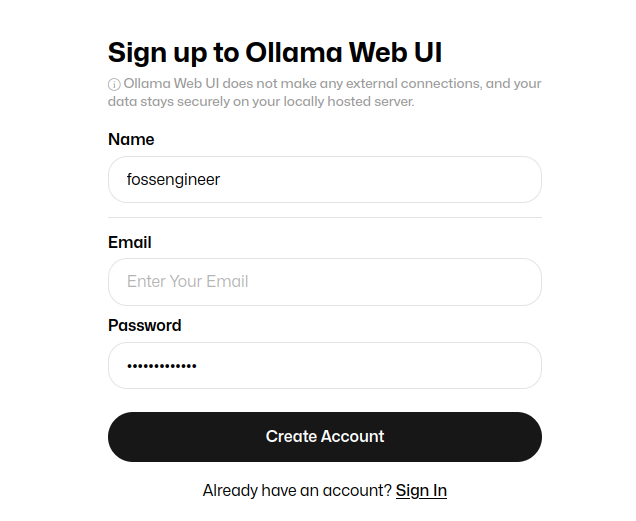

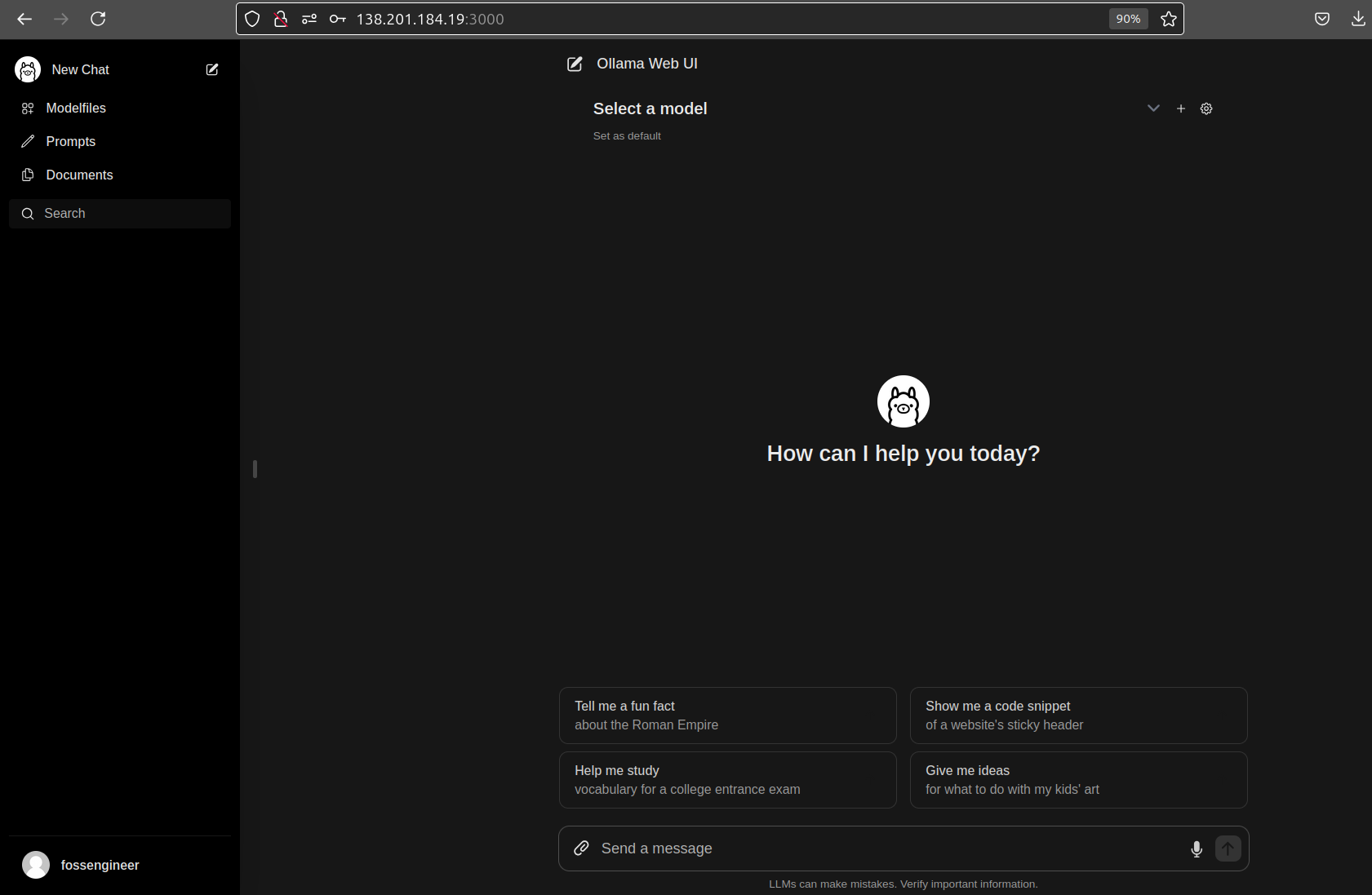

Once both containers are running, you will be able to set up an Open WebUI admin user.

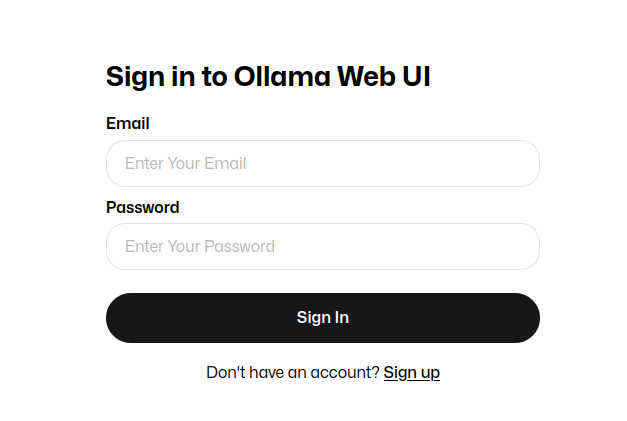

Then just go through the next steps:

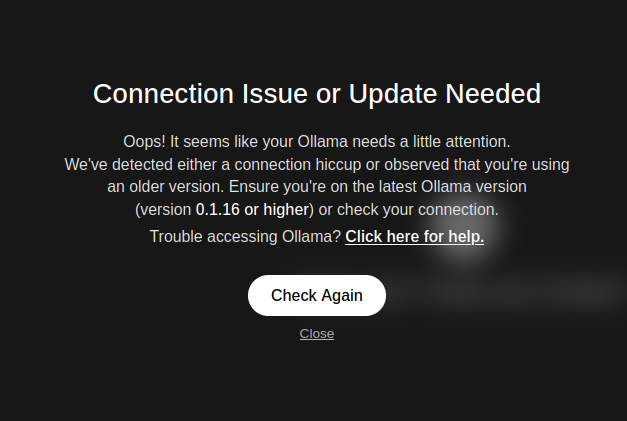

Then, you might see a warning like this:

You can validate it by going into the ollama container:

```bash
docker exec -it ollama /bin/bash
# or, with Podman:
# podman exec -it ollama /bin/bash
```

And simply run:

```bash
ollama --version
# ollama version is 0.5.7-0-ga420a45-dirty
```
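You don't strictly need the interactive shell for this: `docker exec ollama ollama --version` runs the same check from the host. If you want to script it, here is a sketch that pulls the plain version number out of that output and compares it against a minimum. The helper names and the minimum you pass in are my own assumptions, and `version_ge` needs a `sort` that supports `-V` (e.g. GNU coreutils):

```bash
# ollama_semver
# Reads `ollama --version` output on stdin and prints only X.Y.Z,
# e.g. "ollama version is 0.5.7-0-ga420a45-dirty" -> "0.5.7".
ollama_semver() {
  sed -nE 's/.*version is ([0-9]+\.[0-9]+\.[0-9]+).*/\1/p'
}

# version_ge A B
# Succeeds if version A >= version B: after a version sort,
# the smaller of the two comes first, so B must be first.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

For example, `v=$(docker exec ollama ollama --version | ollama_semver)` followed by `version_ge "$v" 0.5.0 && echo "Ollama $v"`, where `0.5.0` is only a placeholder minimum.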

So just make sure that both containers can talk to each other. Here they share a network, and the UI reaches Ollama through its container name:

```yml
#version: "3.8"
services:
  ollama:
    image: ollama/ollama
    container_name: ollama
    ports:
      - "11434:11434" # Expose only if needed from outside the Docker network
    volumes:
      - ollama_data:/root/.ollama
    networks:
      - my-network

  ollama-webui:
    image: ghcr.io/open-webui/open-webui:main
    container_name: ollama-webui
    ports:
      - "3000:8080" # The UI!
    networks:
      - my-network
    environment:
      - WEBUI_AUTH=False
      - OLLAMA_BASE_URL=http://ollama:11434 # Use the container name as hostname

networks:
  my-network:

volumes:
  ollama_data:
  ollama-webui_data:
```
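To check that the two containers really can talk, you can ask the web UI container to call Ollama by its container name, which is exactly what `OLLAMA_BASE_URL=http://ollama:11434` relies on. This sketch assumes `curl` is available inside the web UI image and uses Ollama's `GET /api/version` endpoint; the `check_ollama_link` name is made up:

```bash
# check_ollama_link [WEBUI_CONTAINER] [OLLAMA_URL]
# Hypothetical helper: runs curl inside the web UI container against the
# Ollama URL it is configured with, and reports whether the call succeeded.
check_ollama_link() {
  local webui="${1:-ollama-webui}" url="${2:-http://ollama:11434}"
  # /api/version answers with a small JSON document containing the version
  if docker exec "$webui" curl -fsS "$url/api/version"; then
    echo   # put the status line below the JSON
    echo "ok: $webui can reach $url"
  else
    echo "fail: $webui cannot reach $url" >&2
    return 1
  fi
}
```

Run `check_ollama_link` on the host once the stack is up. If it fails, double-check that both services list `my-network` and that `OLLAMA_BASE_URL` uses the container name.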

FAQ

Free Options to Chat with your Documents

- PrivateGPT

- Logseq

- Reor - https://github.com/reorproject/reor

Free & Open Source Ways to Interact with LLMs

- TextgenwebUI

- KoboldCPP

- GPT4All (requires Python)

How to make LLMs work together

- CrewAI

- Fabric

How to make LLMs code for you

- Devin

- https://github.com/VRSEN/agency-swarm-lab

- Smoldev

- Tabby

- Continue