Why Ollama with UI

Ollama is an amazing F/OSS project that lets us spin up local LLMs for free with just a few commands, similar to the ones we use to run Docker containers.

And just when you think that is all there is, you come across another project built on top of it: Ollama Web UI.

As you can imagine, it lets you use Ollama through a friendly user interface in your browser.

The Ollama Web UI Project

SelfHosting Ollama Web UI

Pre-Requisites - Get Docker! 👇

An important step, and highly recommended for any SelfHosting project: get Docker installed.

On Linux it only takes these two commands:

sudo apt-get update && sudo apt-get upgrade && curl -fsSL https://get.docker.com -o get-docker.sh

sh get-docker.sh && docker version

Also make sure to have Ollama ready on your computer! You can set it up with a regular installation or with Docker.

You will know that you are ready to go if you get a response from: http://127.0.0.1:11434/
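The check above can also be run from a terminal. This is a minimal sketch, assuming curl is installed and Ollama listens on its default port 11434; the function name check_ollama and the OLLAMA_URL variable are illustrative names, not part of Ollama.

```shell
#!/usr/bin/env bash
# Base URL of the local Ollama server (assumption: default port 11434).
OLLAMA_URL="${OLLAMA_URL:-http://127.0.0.1:11434}"

# Print "reachable" if the server answers with a successful HTTP status,
# "unreachable" otherwise (connection refused, timeout, HTTP error, no curl).
check_ollama() {
  if curl -fsS --max-time 5 "$1/" >/dev/null 2>&1; then
    echo "reachable"
  else
    echo "unreachable"
  fi
}

check_ollama "$OLLAMA_URL"
```

If it prints unreachable, start Ollama (or its container) first and run the check again before deploying the Web UI.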

Ollama Web UI Docker Compose

The Ollama Web UI project already provides a pre-built Docker image.

We just need this Docker-Compose configuration to deploy it as a Stack with Portainer.

Use Ollama and OpenWebUI with one Stack⏬

This will make it easier if you don't have Ollama running as a container yet.

#version: '3'
services:
  ollama:
    image: ollama/ollama
    container_name: ollama
    ports:
      - "11434:11434"
    volumes:
      - ollama_data:/root/.ollama

  ollama-webui:
    image: ghcr.io/ollama-webui/ollama-webui:main
    container_name: ollama-webui
    ports:
      - "3000:8080" # 3000 is the port that you will access in your browser
    extra_hosts: # lets the container reach services running on the host
      - "host.docker.internal:host-gateway"
    volumes:
      - ollama-webui_data:/app/backend/data
    restart: always

volumes:
  ollama_data:
  ollama-webui_data:

The stack below deploys only the Web UI and pulls its container image from the GitHub Container Registry:

version: '3'
services:
  ollama-webui:
    image: ghcr.io/ollama-webui/ollama-webui:main
    container_name: ollama-webui
    ports:
      - "3000:8080" # 3000 is the port that you will access in your browser
    extra_hosts: # lets the container reach services running on the host
      - "host.docker.internal:host-gateway"
    volumes:
      - ollama-webui:/app/backend/data
    restart: always

volumes:
  ollama-webui: # named volume used by the service above

Once deployed, just go to: http://127.0.0.1:3000

Remember that you can choose any other free port on your computer instead of 3000 by changing the left side of the "3000:8080" mapping.
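To find out whether 3000 is already taken before deploying, something like the following sketch can help. It assumes bash (it relies on bash's built-in /dev/tcp redirection) and only probes 127.0.0.1, so a service bound to a different interface would go unnoticed; port_in_use and first_free_port are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Succeed (exit status 0) if something accepts TCP connections on 127.0.0.1:$1.
# The connection is opened in a subshell, so it is closed again right away.
port_in_use() {
  (exec 3<>"/dev/tcp/127.0.0.1/$1") 2>/dev/null
}

# Print the first port at or after $1 that nothing is listening on.
first_free_port() {
  local port="$1"
  while port_in_use "$port"; do
    port=$((port + 1))
  done
  echo "$port"
}

HOST_PORT="$(first_free_port 3000)"
echo "Use \"${HOST_PORT}:8080\" in the ports section, then open http://127.0.0.1:${HOST_PORT}"
```

Only the host side of the mapping changes; the container side stays at 8080.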

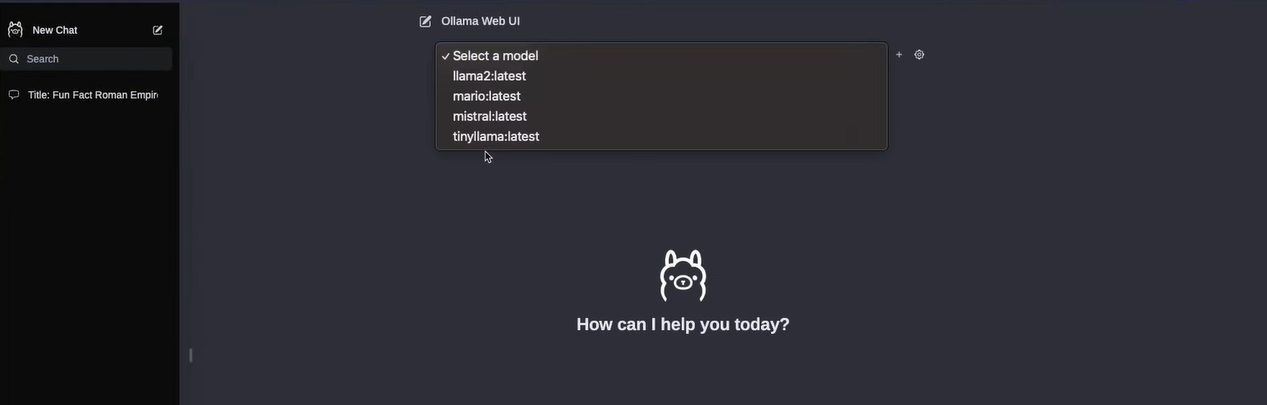

And you will see this beautiful UI to interact with Ollama locally:

Now it is your turn to make the most of free and local LLMs, this time with an even friendlier interface.

FAQ

Ollama can run models from the Ollama Library, from Hugging Face, or from GGUF files (a format optimized for running models locally on CPU or GPU).

How to use Ollama with HTTPS?

You can use NGINX as a reverse proxy in front of the Ollama Docker container. NGINX serves the SSL certificates and terminates HTTPS, so you can expose Ollama safely if you need to. Plain NGINX does not issue certificates by itself; a tool such as Certbot or Nginx Proxy Manager can obtain them from Let's Encrypt.

If you are interested in deploying a separate NGINX instance with Docker, I already created a guide for that here.

Great LLMs for Ollama

- For general purpose

- For Vision