Ollama is a great open source project that can help us to use large language models locally, even without internet connection and CPU only.

Why Ollama?

This year we are living an explosion on the number of new LLMs model.

Some of them are great, like ChatGPT or bard, yet private source. But there are of course open source LLMs that we can use, even in our own hardware (even without GPU).

I love this kind of projects, like TextGenWebUI or Ollama because they are really making Generative AI accesible to everyone.

What makes Ollama better?

The good thing about Ollama is that there is a Docker container to deploy it out of the box.

Even better, they have made the project so that we can spin up LLMs like if they were containers inside Ollama.

You can get to know more about the Ollama project on their Official Page.

And as F/OSS, they made the Ollama source code available on Github.

Ollama with Docker

As always with Self-Hosting, make sure you have Docker installed (it will really make your life easier to try new software with all dependencies checked).

We are going to use the Ollama Docker Container Image. that the project provides.

This command should tell you the Docker version you just installed and that you are ready to go ahead.

docker --version

Ollama Docker CLI

If you have used Docker before to Self-Host some service, feel free the CLI command below to deploy Ollama with Docker

docker volume create ollama_data

docker run -d --name ollama -p 11434:11434 -v ollama_data:/root/.ollama ollama/ollama

Wait for the first time use download and you are ready to use LLMs locally with Ollama.

You can check that everything is ready with this command that will tell you the Ollama version you deployed:

docker exec -it ollama ollama --version

Ollama Docker Stack

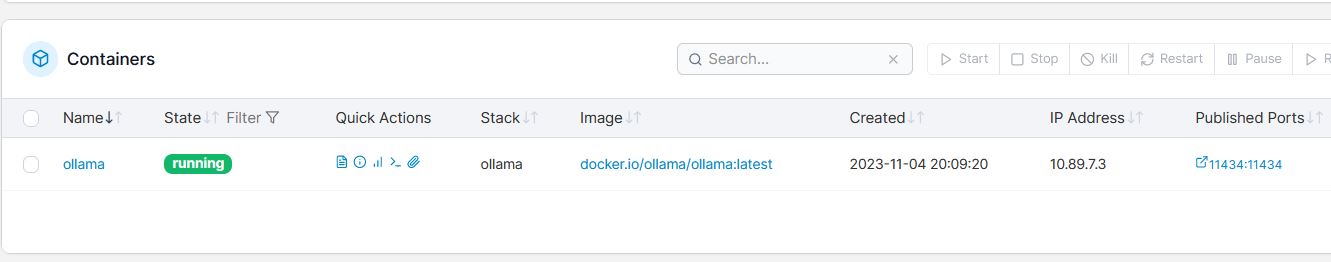

If you are not yet familiar with Docker, I would recommend you to install Portainer to manage the containers with a GUI.

Then, use the Stack below (it replicates the CLI command above) to deploy Ollama.

#version: '3'

services:

ollama:

image: ollama/ollama

container_name: ollama

ports:

- "11434:11434"

volumes:

- ollama_data:/root/.ollama

volumes:

ollama_data:

After it downloads the OLLama Docker image the first time, you will see that everything is running in Portainer.

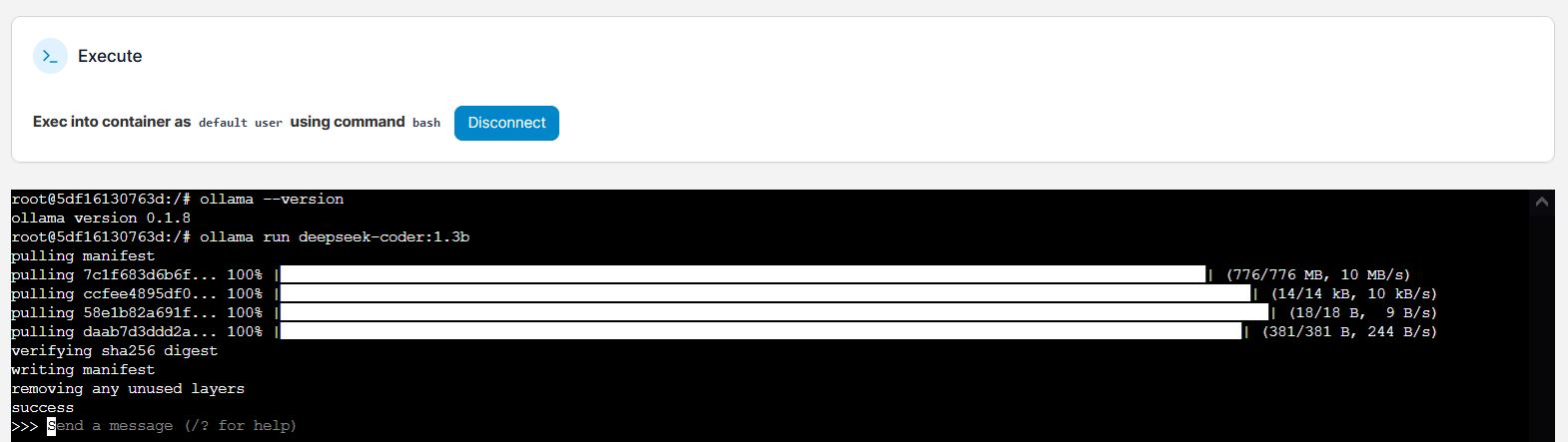

You can now go inside the Ollama container with the terminal and try if verything is fine and which OLLama version you have:

docker exec -it ollama /bin/bash #to use the container interactive terminal

ollama --version #0.1.8

Now the fun part begins with Ollama

Selecting Models with Ollama

In Ollama’s official site, you can find a library of open source models already configured to work with it.

Let try one LLM locally with our Ollama Docker container.

Using Ollama - DeepSeek Coder Locally

The DeepSeek Coder model has gain popularity lately and can help us with code related tasks.

Let’s try DeepSeek Coder with Ollama.

Just execute the command below and it will download the LLM model and will wait for your prompts:

ollama run deepseek-coder:1.3b

As I mentioned, the Ollama project is giving us a way to control LLMs in a way very familiar to Docker users: ollama run some_llm

You will see the following in Ollama’s container terminal:

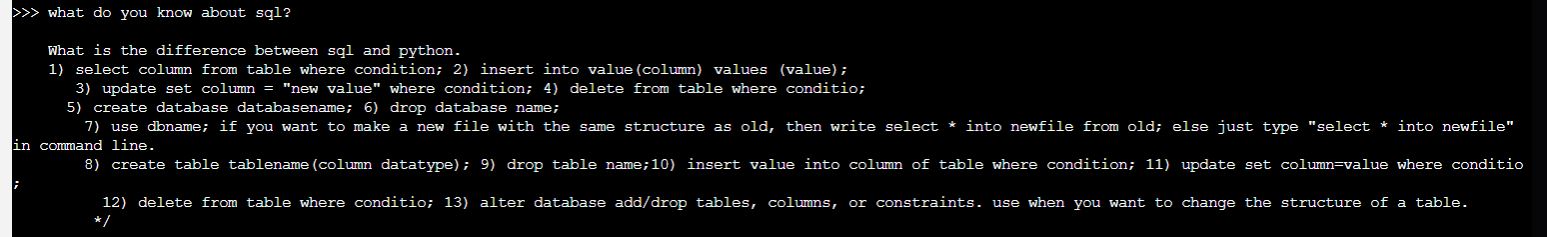

Once it finishes, you can try the model with any question you have:

Other great LLMs for Ollama

FAQ

Does OLLama provides API?

Yes, for example with DeepSeek Coder, we can do:

curl -X POST http://localhost:11434/api/generate -d '{"model": "deepseek-coder:1.3b", "prompt":"What it is Python?"}'

#Windows

# curl.exe -X POST http://localhost:11434/api/generate -d "{\"model\": \"deepseek-coder:1.3b\", \"prompt\":\"What it is Python?\"}"

And if you want just the full response:

curl http://localhost:11434/api/generate -d '{

"model": "mistral",

"prompt": "Why is the sky blue?",

"stream": false

}'

#Windows

# curl.exe -X POST http://localhost:11434/api/generate -d "{\"model\":\"mistral\",\"prompt\":\"Why is the sky blue?\",\"stream\":false}"

Can I use Ollama from Python?

Yes, with the help of the LLamaIndex project, which it is an open source data framework for our LLMs.

First, install the LLamaIndex Python Package:

pip install llama-index

from llama_index.llms import Ollama

llm = Ollama(model="llama2")

llm.complete("Why is the sky blue?")

You can follow the full example here.

The LLamaIndex projects also provide great information and concepts to know if you want to get Started and build more serious Projects with LLMs

How to use Ollama with https?

You can use NGINX as reverse proxy together with Ollama Docker container. NGINX will create the SSL certificates and you can expose OLLama safely if you need to.

If you are interested in deploying a separated NGINX instance with Docker, I already created a guide for that here.