Generative AI: LLMs Locally

One of the most fascinating breakthroughs has been in generative AI, particularly in models specialized in text.

These innovative models, like an artist with a blank canvas, craft sentences, paragraphs, and stories, stitching together words in ways that were once the exclusive domain of human intellect.

No longer just tools for querying databases or executing commands, these models are akin to novelists, poets, and playwrights. Furthermore, they can write code and even create full projects on their own.

- GPT-3 and GPT-4 from OpenAI are two of the most well-known LLMs.

  - GPT-3 has 175 billion parameters (OpenAI has not disclosed the size of GPT-4), and both can be used for a variety of tasks, such as generating text, translating languages, and writing different kinds of creative content.

- PaLM (Pathways Language Model) is a 540 billion parameter LLM from Google AI. It is one of the largest LLMs ever created, and it can perform a wide range of tasks, including question answering, coding, and natural language inference.

Other models, some of them F/OSS! 🦒 👇

- LaMDA (Language Model for Dialogue Applications) is a 137 billion parameter LLM from Google AI. It is designed specifically for dialogue applications, such as chatbots and virtual assistants.

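- Chinchilla is a 70 billion parameter LLM from DeepMind. It is known for its efficiency: it was trained on far more data (about 1.4 trillion tokens) than other models of its size, and it outperforms much larger models such as Gopher (280 billion parameters) on many language tasks. It has not been publicly released.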

- LLaMA (Large Language Model Meta AI) is a 65 billion parameter LLM from Meta AI. It is designed to be more accessible than other LLMs, and it is also available in smaller sizes (7, 13 and 33 billion parameters) that require less computing power.

LLaMA has also spawned a number of open-source derivatives:

- Vicuna is a LLaMA-based LLM from LMSYS, available in 7, 13 and 33 billion parameter sizes. It is fine-tuned on user-shared ChatGPT conversations (from ShareGPT), and it is designed for dialogue applications.

- Orca is a 13 billion parameter LLM from Microsoft Research that is based on LLaMA. It is trained to imitate the step-by-step explanations of GPT-4, which helps a relatively small model handle tasks such as text generation, translation, and question answering.

- Guanaco is a family of LLaMA-based chat models, fine-tuned with QLoRA on the OASST1 conversational dataset. They come in sizes from 7 billion to 65 billion parameters and are designed mainly for chatbot use.

- More open-source models? Also have a look at Falcon, Alpaca, …
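The parameter counts above also explain why the smaller and quantized variants matter for running models on a CPU. As a back-of-the-envelope estimate (an approximation that ignores activations, the KV cache and file-format overhead), the weights alone take roughly parameters × bits per weight ÷ 8 bytes. A minimal sketch of that arithmetic:

```python
def weights_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes).

    Rough estimate only: ignores activations, the KV cache and format overhead.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Compare full 16-bit weights with a 4-bit quantized version of each size.
    for size in (7, 13, 33, 65):
        fp16 = weights_memory_gb(size, 16)
        q4 = weights_memory_gb(size, 4)
        print(f"{size}B params: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

For example, a 7 billion parameter model needs about 14 GB at 16-bit precision but only about 3.5 GB at 4-bit, which is what makes quantized community builds practical on ordinary machines.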

While the promise of this technology sounds almost like science fiction and there’s considerable hype surrounding it, there’s truly no better way to understand its capabilities than to experience it firsthand.

So, why merely read about it when you can delve into its intricate workings yourself?

Let’s demystify the buzz and see what these models are genuinely capable of.

In this post, I’ll guide you through interacting with these state-of-the-art LLMs locally, and the best part? You can do it for free, using just the CPU.

Using LLMs Locally

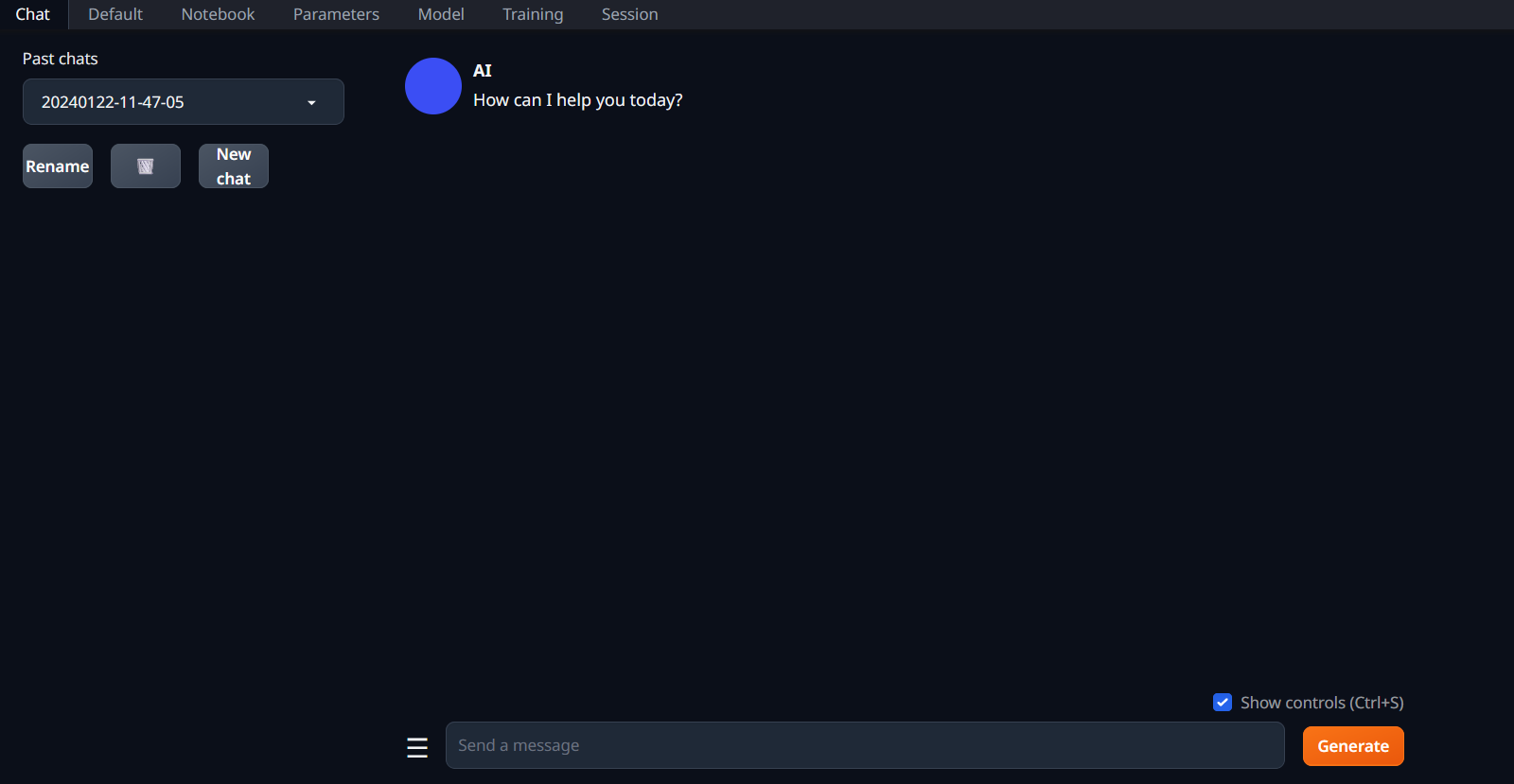

We need an interface to use our LLMs, and there is a perfect project for that which provides a Gradio web UI.

What is Gradio?

Gradio is a fantastic open-source Python library designed to streamline the creation of user interfaces (UIs) for various purposes.

Share delightful machine learning apps, all in Python
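To get a feel for what Gradio does, here is a minimal sketch, assuming `gradio` is installed with pip, that wraps a plain Python function in a web UI. The `respond` function is a hypothetical stand-in for a real model call; the Text Generation Web UI builds a much richer interface on the same library.

```python
def respond(prompt: str) -> str:
    """Hypothetical stand-in for a model call: echoes the prompt in upper case."""
    return prompt.strip().upper()


def build_demo():
    """Wrap `respond` in a minimal Gradio text-in/text-out interface."""
    import gradio as gr  # imported lazily; install with: pip install gradio

    return gr.Interface(fn=respond, inputs="text", outputs="text", title="Echo demo")
```

Calling `build_demo().launch(server_name="0.0.0.0", server_port=7860)` would serve it on port 7860, Gradio's default. Binding to `0.0.0.0` rather than only to localhost is what makes such an app reachable from outside a Docker container.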

The Text Generation Web UI Project

In general, the project’s instructions work and can be replicated fairly easily, but I wanted to simplify the dependency setup with Docker.

So what you will need is:

- Install Docker

- Install Portainer: it makes container management easier.

- Have a look at the fantastic Oobabooga’s Project

- The container image is built following the instructions of this repo

- Decide which LLM you want to use: have a look at Hugging Face

Self-Hosting TextGenerationWebUI

I have already built the image and pushed it to Docker Hub, so you can skip the rather long wait for the dependencies to install.

For CPU-only use, you will probably need this PyTorch build:

```sh
pip3 install --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/cpu
```

The docker-compose file (or Stack, in Portainer) is as simple as this, and it gives us TextGenWebUI:

```yaml
#version: '3'
services:
  genai_text:
    image: fossengineer/oobabooga_cpu
    container_name: genai_ooba
    ports:
      - "7860:7860"
    working_dir: /app
    command: tail -f /dev/null # keep it running
    volumes: # Choose your way (keep only one line uncommented)
      # - C:/Path/to/Models/AI/Docker_Vol:/app/text-generation-webui/models
      - /home/AI_Local:/app/text-generation-webui/models # replace with your models folder
      # - appdata_ooba:/app/text-generation-webui/models

# Only needed for the named-volume option above:
# volumes:
#   appdata_ooba:
```
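The three `volumes` options in the stack above differ in kind: a host path (Windows or Linux) gives you a bind mount, so the container sees a folder on your machine, while a bare name such as `appdata_ooba` refers to a named volume that Docker manages itself (which is why it also needs the top-level `volumes:` entry). As an illustration only, here is a hypothetical helper, not part of Docker or Compose, that applies a simplified version of that rule to the short `SOURCE:TARGET` syntax:

```python
import re
from typing import Tuple

# Windows drive-letter prefix such as "C:/" or "C:\"
_WINDOWS_DRIVE = re.compile(r"^[A-Za-z]:[\\/]")


def classify_volume(spec: str) -> Tuple[str, str, str]:
    """Split a short-syntax volume entry "SOURCE:TARGET" into (kind, source, target).

    kind is "bind" for host paths and "named" for Docker-managed volumes.
    Simplified sketch: assumes no ":ro"/":rw" mode suffix.
    """
    # Split on the LAST colon so Windows paths like "C:/..." stay intact.
    source, target = spec.rsplit(":", 1)
    if source.startswith(("/", ".", "~")) or _WINDOWS_DRIVE.match(source):
        return "bind", source, target
    return "named", source, target


if __name__ == "__main__":
    for entry in (
        "C:/Path/to/Models/AI/Docker_Vol:/app/text-generation-webui/models",
        "/home/AI_Local:/app/text-generation-webui/models",
        "appdata_ooba:/app/text-generation-webui/models",
    ):
        print(classify_volume(entry))
```

Real Compose parsing handles more cases (mode suffixes such as `:ro`, relative paths resolved against the compose file's folder), so treat this as a mental model rather than a validator.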

I want to build my own 🐳 👇

For CPU, this is what you need:

```sh
pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu
pip install -r requirements_cpu_only.txt
#python server.py --listen
```

These steps can be captured in a Dockerfile:

```dockerfile
# Use the specified Python base image
FROM python:3.11-slim

# Set the working directory in the container
WORKDIR /app

# Install the system packages needed to clone the repo and build native extensions
RUN apt-get update && apt-get install -y \
    git \
    build-essential \
    && rm -rf /var/lib/apt/lists/*

# Install CPU-only PyTorch, torchvision, and torchaudio
RUN pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu

# Clone the (public) text-generation-webui repository
RUN git clone https://github.com/oobabooga/text-generation-webui

WORKDIR /app/text-generation-webui

# Install the CPU-only Python requirements shipped with the repo
#COPY requirements_cpu_only.txt .
RUN pip install -r requirements_cpu_only.txt

# Build with: podman build -t textgenwebui .   (or: docker build -t textgenwebui .)
```

Then use this stack to deploy:

```yaml
#version: '3'
services:
  genai_textgenwebui:
    image: textgenwebui
    container_name: textgenwebui
    ports:
      - "7860:7860"
    working_dir: /app/text-generation-webui
    command: python server.py --listen #tail -f /dev/null #keep it running
    volumes: #Choose your way
      # - C:\Users\user\Desktop\AI:/app/text-generation-webui/models
      # - /home/AI_Local:/app/text-generation-webui/models
      - appdata_ooba:/app/text-generation-webui/models

volumes:
  appdata_ooba:
```

This will spin up a docker container with Python and Oobabooga’s Web UI dependencies already installed.

The Gradio UI to interact with LLMs is now ready at http://localhost:7860
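To check from the host that the published port actually answers, you can try to open a TCP connection to it. A minimal, stdlib-only sketch (the `port_is_open` name and the 2-second timeout are my own choices); note that it only tells you that something is listening, not that it is the web UI:

```python
import socket


def port_is_open(host: str = "localhost", port: int = 7860, timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timed out, host not found, ...
        return False


if __name__ == "__main__":
    if port_is_open():
        print("Something is listening at http://localhost:7860")
    else:
        print("Nothing is listening on port 7860 yet; check the container logs.")
```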

Inside this container we are missing just one thing: the LLM models. Download a model to your PC and set up the matching bind mount in the Docker YAML file above, so that the container can see the model files (for example, the .bin files).

Adding an LLM Model

- You can try GGUF models: each one is a single file and should be placed directly into the models folder.

- GGUF is a new format introduced by the llama.cpp team on August 21st, 2023. It is a replacement for GGML.

- Thanks to https://github.com/ggerganov/llama.cpp, you can convert HF, GGML, and LoRA models to .gguf.

- The remaining model types (like 16-bit transformers models and GPTQ models) are made of several files and must be placed in a subfolder.
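The single-file rule is easy to check before you mount a folder. GGUF files begin with the four ASCII bytes `GGUF` followed by a little-endian 32-bit format version, so a quick header read tells you whether a `.gguf` file is what it claims to be. The sketch below (hypothetical helper names, standard library only) uses that to sort a models folder according to the placement rules above:

```python
import struct
import sys
from pathlib import Path
from typing import Dict, List, Optional

GGUF_MAGIC = b"GGUF"  # every GGUF file starts with these 4 ASCII bytes


def gguf_version(path: Path) -> Optional[int]:
    """Return the GGUF format version stored in the file header, or None if not GGUF."""
    with open(path, "rb") as f:
        header = f.read(8)
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # The magic is followed by the format version as a little-endian uint32.
    (version,) = struct.unpack("<I", header[4:8])
    return version


def models_dir_layout(models_dir: Path) -> Dict[str, List[str]]:
    """Group the entries of a models folder following the placement rules:
    single-file GGUF models sit directly in models/, multi-file models in subfolders."""
    layout: Dict[str, List[str]] = {"gguf_files": [], "model_folders": [], "other_files": []}
    for entry in sorted(models_dir.iterdir()):
        if entry.is_dir():
            layout["model_folders"].append(entry.name)
        elif entry.suffix == ".gguf" and gguf_version(entry) is not None:
            layout["gguf_files"].append(entry.name)
        else:
            # e.g. older GGML .bin files, or a ".gguf" name without a GGUF header
            layout["other_files"].append(entry.name)
    return layout


if __name__ == "__main__":
    # Hypothetical default: a "models" folder in the current directory.
    folder = Path(sys.argv[1]) if len(sys.argv) > 1 else Path("models")
    if folder.is_dir():
        print(models_dir_layout(folder))
    else:
        print(f"No folder found at {folder}")
```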

We can try for example with Vicuna 👇

- Go to Hugging Face and download one of the models: https://huggingface.co/eachadea/ggml-vicuna-7b-1.1/tree/main

- I tried it with ggml-vic7b-uncensored-q5_1.bin

- Deploy the first stack above (the prebuilt image), binding the folder on your system that contains the .bin file

- Then open a terminal inside the running container (for example, from Portainer's console) and execute: conda init bash

- Restart the interactive terminal and execute the following:

```sh
conda activate textgen
cd text-generation-webui
#python server.py
python server.py --listen
```

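With those commands, we activate the textgen conda environment, move to the folder where all the action happens, and start the Python server. Inside a Docker container we need the --listen flag: it makes the server listen on all network interfaces instead of only on the container's localhost, so the port published to your machine can reach it.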

FAQ

Ways to Evaluate LLMs

- LMSYS Chatbot Arena: Benchmarking LLMs in the Wild: https://chat.lmsys.org/

- https://github.com/ray-project/llmperf - a library for validating and benchmarking LLMs
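Chatbot Arena turns anonymous, crowd-sourced head-to-head votes between two models into a leaderboard; its original announcement ranked models with Elo ratings. As a rough illustration of how pairwise votes become ratings, here is the textbook Elo update (the K-factor of 32 and the starting rating of 1000 are illustrative assumptions, not the Arena's actual settings):

```python
from typing import Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0) -> Tuple[float, float]:
    """Return the new (rating_a, rating_b) after one vote.

    score_a is 1.0 if A won, 0.0 if B won, 0.5 for a tie.
    The update is zero-sum: whatever A gains, B loses.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    # Hypothetical votes between two local models, both starting at 1000.
    ratings = {"model_x": 1000.0, "model_y": 1000.0}
    votes = [("model_x", "model_y", 1.0), ("model_x", "model_y", 0.5), ("model_x", "model_y", 0.0)]
    for a, b, score in votes:
        ratings[a], ratings[b] = elo_update(ratings[a], ratings[b], score)
    print(ratings)
```

An upset against a higher-rated model moves the ratings more than an expected win, which is what lets a leaderboard settle after enough votes.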

How can I try LLMs safely with Docker?

You can use a Python container and install the dependencies in a fresh, isolated environment:

```yaml
#version: '3'
services:
  my-python-app:
    image: python:3.11-slim
    container_name: python-dev
    command: tail -f /dev/null
    volumes:
      - python_dev:/app
    working_dir: /app # Set the working directory to /app
    ports:
      - "8501:8501"

volumes:
  python_dev:
```