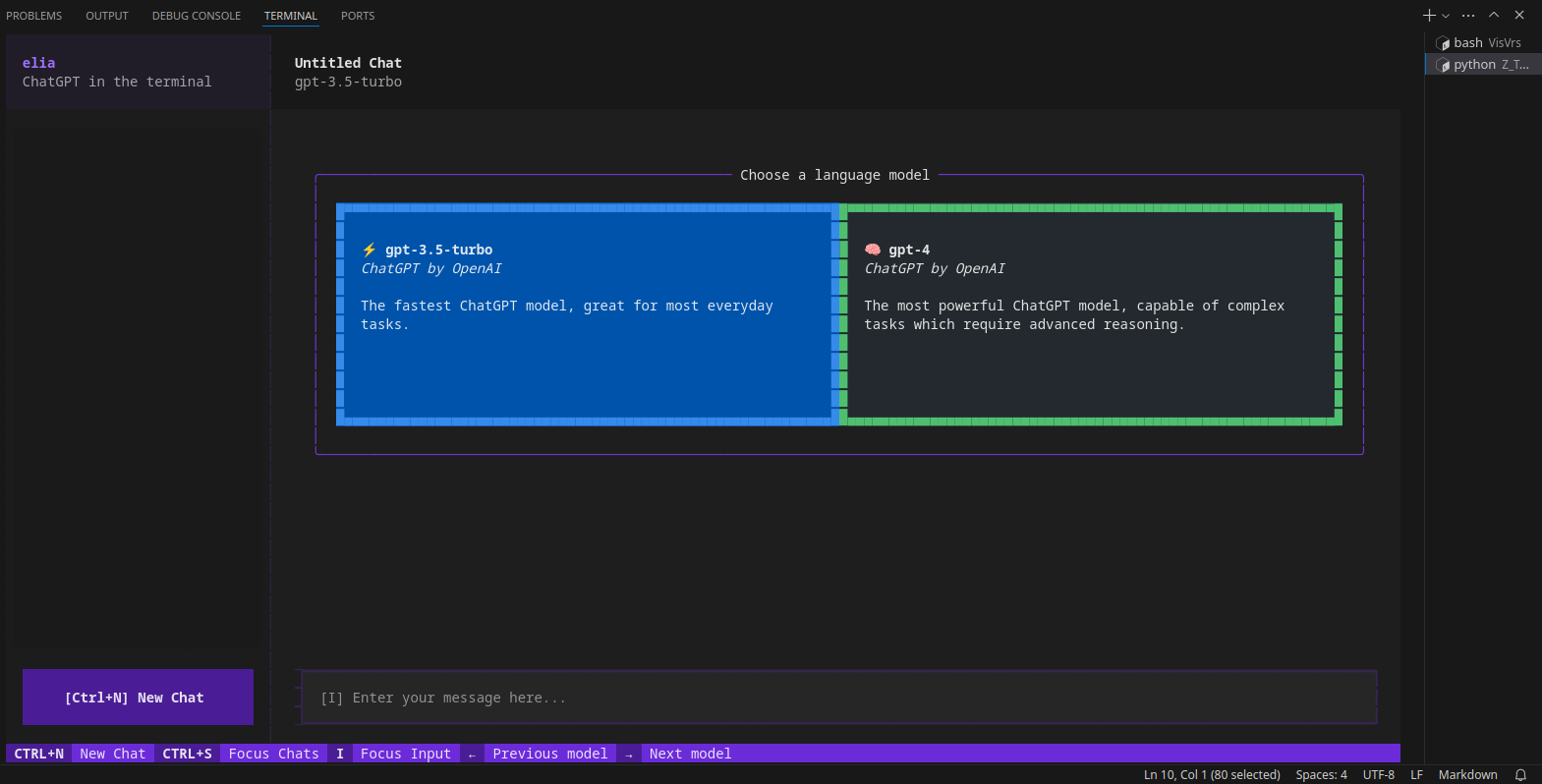

A snappy, keyboard-centric terminal user interface for interacting with large language models.

Chat with ChatGPT, Claude, Llama 3, Phi 3, Mistral, Gemma and more.

- The Elia Source Code at GitHub

- License: AGPL 3.0 ✅

Elia is a full TUI app that runs in your terminal, so it is not as lightweight as llm-term, but it uses a SQLite database and lets you continue old conversations.

Install and run Elia as a Python application in an isolated environment:

sudo apt install pipx #https://github.com/pypa/pipx

pipx install elia-chat

#pipx uninstall elia-chat

#pipx list

echo $PATH

#export PATH="$PATH:/home/youruser/.local/bin"

Once installed, just interact with Elia like so:

elia --help

elia chat "hi"

When Elia is done installing, you will see the following message:

# installed package elia-chat 0.4.1, installed using Python 3.10.12

# These apps are now globally available

# - elia

# ⚠️ Note: '/home/jalcocert/.local/bin' is not on your PATH environment variable. These apps will not be globally accessible until your PATH is updated. Run `pipx ensurepath` to automatically add it, or manually modify your PATH in your shell's config file (i.e. ~/.bashrc).

# done! ✨ 🌟 ✨
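If you hit the PATH warning shown above, a quick check like the following can confirm whether the directory is visible to your shell. This is only a sketch: the helper name `is_on_path` is made up for illustration, and `~/.local/bin` is assumed to be where pipx placed the `elia` launcher (use whatever path your own warning message prints).

```python
import os
from pathlib import Path


def is_on_path(directory, path_value):
    """Return True if `directory` is one of the entries of a PATH-style string."""
    target = Path(directory).expanduser()
    entries = [Path(p).expanduser() for p in path_value.split(os.pathsep) if p]
    return target in entries


# Assumed location of pipx-installed apps; replace it with the path from your warning.
local_bin = Path.home() / ".local" / "bin"
print(is_on_path(local_bin, os.environ.get("PATH", "")))
```

If this prints `False`, running `pipx ensurepath` (as the message suggests) and opening a new shell should fix it.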

Elia is an application for interacting with LLMs which runs entirely in your terminal, and is designed to be keyboard-focused, efficient, and fun to use!

It stores your conversations in a local SQLite database, and allows you to interact with a variety of models.

Speak with proprietary models such as ChatGPT and Claude, or with local models running through ollama or LocalAI.
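To get a feeling for what "conversations stored in a local SQLite database" means in practice, here is a rough sketch using Python's built-in `sqlite3` module. The table and column names are hypothetical and chosen only for illustration; Elia's real schema may look quite different.

```python
import sqlite3

# Hypothetical schema for illustration only; Elia's real tables may differ.
SCHEMA = """
CREATE TABLE conversation (
    id INTEGER PRIMARY KEY,
    title TEXT NOT NULL
);
CREATE TABLE message (
    id INTEGER PRIMARY KEY,
    conversation_id INTEGER NOT NULL REFERENCES conversation(id),
    role TEXT NOT NULL,
    content TEXT NOT NULL
);
"""


def save_message(db, conversation_id, role, content):
    """Append one message to a conversation."""
    db.execute(
        "INSERT INTO message (conversation_id, role, content) VALUES (?, ?, ?)",
        (conversation_id, role, content),
    )
    db.commit()


def load_history(db, conversation_id):
    """Return the messages of a conversation in the order they were saved."""
    rows = db.execute(
        "SELECT role, content FROM message WHERE conversation_id = ? ORDER BY id",
        (conversation_id,),
    )
    return [{"role": role, "content": content} for role, content in rows]


db = sqlite3.connect(":memory:")  # use a file path instead to persist between runs
db.executescript(SCHEMA)
chat_id = db.execute("INSERT INTO conversation (title) VALUES (?)", ("demo",)).lastrowid
save_message(db, chat_id, "user", "hi")
save_message(db, chat_id, "assistant", "Hello! How can I help?")
print(load_history(db, chat_id))
```

Because the history lives in a database file rather than in memory, an old conversation can be reloaded and continued later.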

Elia with Open Models

Elia with Ollama

- Set up Ollama to manage LLMs like containers

- Provide the Ollama API endpoint to Elia
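Before pointing Elia at Ollama, it can help to confirm that the endpoint answers. The sketch below assumes Ollama is listening on its default address, `http://localhost:11434`, and uses its `/api/tags` route to list the models you have pulled; the function names are my own.

```python
import json
import urllib.request

# Ollama's default local address; adjust it if your server runs elsewhere.
OLLAMA_URL = "http://localhost:11434"


def model_names(payload):
    """Extract the model names from the JSON returned by Ollama's /api/tags."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url=OLLAMA_URL, timeout=5):
    """Ask a running Ollama server which models it has available locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))


# With Ollama running: print(list_local_models())
```

If the call fails with a connection error, the server is probably not running or is bound to a different host or port.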

Elia with Proprietary Models

Remember that the following are not local models: you will be sending requests to third-party servers.

Elia with OpenAI ❎

Depending on the model you wish to use, you may need to set one or more environment variables (e.g. OPENAI_API_KEY, ANTHROPIC_API_KEY, GEMINI_API_KEY etc).

export OPENAI_API_KEY="sk-proj-..." #linux

#$Env:OPENAI_API_KEY = "sk-..." #PS
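A small check like this one can tell you which of those variables are still missing before you launch Elia. It is a sketch: the variable names are the ones mentioned above, and the helper `missing_keys` is just an illustrative name.

```python
import os

# Names taken from the text above; which ones you need depends on the model you use.
KEY_NAMES = ["OPENAI_API_KEY", "ANTHROPIC_API_KEY", "GEMINI_API_KEY"]


def missing_keys(env, names=KEY_NAMES):
    """Return the names that are unset or empty in the given environment mapping."""
    return [name for name in names if not env.get(name)]


print("Missing:", missing_keys(os.environ))
```

You only need the key for the provider you actually plan to use, so a non-empty list is not necessarily a problem.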

Using Elia with Gemini ❎

Google offers two main families of models:

- Gemini proper: These models offer the highest level of performance and capabilities, but they are proprietary and are accessed through Google's hosted services rather than run locally.

- Gemma models: Aimed at wider accessibility, Gemma models are open-weight models built from the same research and technology as Gemini. They come in different sizes (e.g., Gemma 2B, Gemma 7B), offering a balance between power and efficiency. Users can run them on their own hardware or use Google Cloud services.

You will be redirected to Google AI Studio.

PaLM itself is not widely available to the public due to its immense computational requirements.

However, Google offers access to PaLM 2 models through:

- Vertex AI: This allows businesses to use PaLM 2 capabilities with Google Cloud’s enterprise-grade security and privacy features.

- Duet AI: This is a generative AI collaborator powered by PaLM 2, designed to help users learn, build, and operate faster.

Anthropic - Claude with Elia ❎

Claude models are not open source. They were created by Anthropic and trained using a technique called Constitutional AI.

Remember that Anthropic’s technology is proprietary and subject to change.

- Claude v1 - The original Claude model, focused on being helpful, harmless, and honest.

- Claude v2 - An upgraded model released in 2023 with improvements in holding more natural conversations.

- Claude Instant - A lighter, faster, and cheaper model that was offered alongside Claude v1 and v2.

- Claude 3 (March 2024): The most powerful generation, with top-level performance on complex tasks. It can handle open-ended prompts and unseen scenarios with fluency and human-like understanding. Claude 3 also offers support for multiple languages, including English, Spanish, and Japanese.

- Claude 3 isn’t a single model; it is a family of three (Haiku, Sonnet, and Opus), and Anthropic offers these tiers to cater to various needs and budgets: https://www.anthropic.com/news/claude-3-family

- Claude Haiku: The fastest and most cost-effective option within Claude 3. It’s ideal for lighter tasks where speed and affordability are priorities.

- Claude Sonnet: Offers a balance between performance and cost. It’s a good choice for users who need a powerful model for various tasks without breaking the bank.

- Claude Opus: The top-of-the-line option delivering maximum power and performance. It’s ideal for complex tasks and demanding applications.

- Claude 3 Sonnet is free for anyone who creates a Claude account. To try Claude 3 Opus, however, you must purchase the Claude Pro plan, which costs $20 per month.

- Context window: 200K

You can use these models from Bedrock and Vertex as well: https://docs.anthropic.com/en/docs/intro-to-claude

- Latest AWS Bedrock model names:

  - anthropic.claude-3-haiku-20240307-v1:0
  - anthropic.claude-3-sonnet-20240229-v1:0
  - anthropic.claude-3-opus-20240229-v1:0

- Vertex AI model names:

  - claude-3-haiku@20240307
  - claude-3-sonnet@20240229
  - claude-3-opus@20240229
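If you switch between platforms in your own scripts, keeping these identifiers in one lookup table avoids typos. The following is just a convenience sketch: the dictionary layout and the `model_id` helper are my own, and only the identifier strings come from the lists above.

```python
# Model identifiers copied from the lists above.
CLAUDE_3_MODEL_IDS = {
    "bedrock": {
        "haiku": "anthropic.claude-3-haiku-20240307-v1:0",
        "sonnet": "anthropic.claude-3-sonnet-20240229-v1:0",
        "opus": "anthropic.claude-3-opus-20240229-v1:0",
    },
    "vertex": {
        "haiku": "claude-3-haiku@20240307",
        "sonnet": "claude-3-sonnet@20240229",
        "opus": "claude-3-opus@20240229",
    },
}


def model_id(platform, tier):
    """Look up the identifier for a Claude 3 tier on a given platform."""
    try:
        return CLAUDE_3_MODEL_IDS[platform][tier]
    except KeyError:
        raise ValueError(f"unknown platform/tier: {platform}/{tier}") from None


print(model_id("bedrock", "haiku"))
```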

Mistral with Elia

FAQ

- Chat with PDFs (Streamlit RAG PDF Chat) - https://fossengineer.com/how-to-chat-with-pdfs/ ❎

- Groq + Streamlit to summarize YT - how-to-summarize-yt-videos ❎

- ChatGPT Clone (3.5/4 and 4o ❎) - how-to-create-chatgpt-clone

F/OSS Tools to Chat with Models from the CLI

Octogen is an Open-Source Code Interpreter Agent Framework

python3 -m venv llms #create it

llms\Scripts\activate #activate venv (windows)

source llms/bin/activate #(linux)

#deactivate #when you are done
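The same environment can also be created from Python itself with the standard-library `venv` module, which is what `python3 -m venv` uses under the hood. A minimal sketch, where `create_env` is a hypothetical helper name and the demo runs in a throwaway directory:

```python
import tempfile
import venv
from pathlib import Path


def create_env(path, with_pip=True):
    """Create a virtual environment at `path` and return its pyvenv.cfg location."""
    venv.create(path, with_pip=with_pip)
    return Path(path) / "pyvenv.cfg"


# Demo in a temporary directory; with_pip=False keeps it fast.
with tempfile.TemporaryDirectory() as tmp:
    cfg = create_env(Path(tmp) / "llms", with_pip=False)
    print(cfg.exists())
```

For real use you would call `create_env("llms")` and then activate it from your shell as shown above.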

With Python and OpenAI

pip install openai

import os

from openai import OpenAI

client = OpenAI(
    # This is the default and can be omitted
    api_key=os.environ.get("OPENAI_API_KEY"),
)

chat_completion = client.chat.completions.create(
    messages=[
        {
            "role": "user",
            "content": "Say this is a test",
        }
    ],
    model="gpt-3.5-turbo",
)

# Print the text of the first (and only) reply
print(chat_completion.choices[0].message.content)

LLM - One Shot

No memory of previous messages.

pip install llm

llm keys set openai

llm models

llm models default

llm models default gpt-4o

Now chat with your model with:

llm "Five cute names for a pet penguin"

You can combine it with shell redirection to save the answer to a file:

llm "What do you know about Python?" > sample.mdx
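The same thing can be scripted from Python by calling the `llm` command as a subprocess. This is a sketch that assumes `llm` is installed, on your PATH, and already has a key configured; the helper names are made up, and `-m` is the CLI option for choosing a model.

```python
import subprocess


def build_command(prompt, model=None):
    """Build the argument list for the `llm` CLI; -m selects a model."""
    command = ["llm"]
    if model:
        command += ["-m", model]
    return command + [prompt]


def save_answer(prompt, path, model=None):
    """Run `llm` and write its output to a file (needs `llm` installed and a key set)."""
    result = subprocess.run(
        build_command(prompt, model), capture_output=True, text=True, check=True
    )
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(result.stdout)


# Example: save_answer("What do you know about Python?", "sample.mdx")
```

Passing the arguments as a list instead of a single string avoids shell quoting problems with prompts that contain quotes or special characters.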

It also works with local models thanks to the GPT4All plugin - https://github.com/simonw/llm-gpt4all

python-prompt-toolkit

llm-term keeps the entire conversation in memory while it is running (that is, for each session you start with llm-term). However, each “chat session” starts fresh and doesn’t retain context from old “conversations”.

https://pypi.org/project/prompt-toolkit/

https://github.com/prompt-toolkit/python-prompt-toolkit

Library for building powerful interactive command line applications in Python
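To make the "in memory while running, fresh on every start" behaviour concrete, here is a small sketch of a chat loop built on prompt-toolkit's `PromptSession`. It is not llm-term's actual code: `ChatSession`, `run_repl`, and the `echo` stand-in for a real model call are all hypothetical names used for illustration.

```python
class ChatSession:
    """Keeps one conversation in memory; nothing is written to disk."""

    def __init__(self, respond):
        # `respond` is a hypothetical callable: list of messages in, reply text out.
        self.respond = respond
        self.messages = []

    def ask(self, text):
        """Record the user message, get a reply, and record the reply too."""
        self.messages.append({"role": "user", "content": text})
        reply = self.respond(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def run_repl(respond):
    """Interactive loop; requires `pip install prompt-toolkit`."""
    from prompt_toolkit import PromptSession

    chat = ChatSession(respond)  # fresh, empty history on every start
    prompt_session = PromptSession()
    while True:
        try:
            line = prompt_session.prompt("you> ")
        except (EOFError, KeyboardInterrupt):
            break  # Ctrl-D or Ctrl-C ends the session and the history is lost
        print(chat.ask(line))


def echo(messages):
    """Stand-in for a real model call, used only for this demo."""
    return f"({len(messages)} messages so far) you said: {messages[-1]['content']}"


# To try it interactively: run_repl(echo)
```

Since the full message list is passed to `respond` on every turn, the model sees the whole conversation, but once the loop exits nothing is kept, which is the difference from Elia's SQLite-backed history.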