- The project is built with Python and Streamlit ✅

- The vector store is open source (FAISS) ✅

- This part (the LLMs) is not Free & Open Source ❎

You can always replace them with open LLMs by running the models with Ollama 🦙
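To give an idea of what swapping in an open model can look like, here is a minimal sketch that talks to a local Ollama server over its REST API using only the standard library. It assumes Ollama is running on its default port (11434) and that a model has already been pulled. The model name `llama3` and the helper names are placeholders for illustration, not part of this project.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_ollama_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(prompt: str, model: str = "llama3", timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = build_ollama_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

Calling `ask_ollama("Summarize this paragraph: ...")` would then play the role that the OpenAI call plays in the app, provided the server is up.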

The initial project is available on GitHub, but you can use your own Gitea repository as well.

Chat with PDF Streamlit

If you want, you can try the project first:

- Install Python 🐍

- Clone the repository

- And install Python dependencies 👇

- (Optional) Use GH Actions to build a production-ready environment

git clone https://github.com/JAlcocerT/ask-multiple-pdfs/

cd ask-multiple-pdfs

python -m venv chatwithpdf #create it

chatwithpdf\Scripts\activate #activate venv (windows)

source chatwithpdf/bin/activate #(linux)

#deactivate #when you are done

Once active, you can just install packages as usual and that will affect only that venv:

pip install -r requirements.txt #all at once

#pip list

#pip show streamlit #check the installed version
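If you are not sure whether the venv is really active before installing anything, a quick check from Python itself can help. This is just a small sketch using the standard library; the function name is made up for illustration.

```python
import sys


def in_virtualenv() -> bool:
    """True when running inside a venv: its prefix differs from the base interpreter's."""
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("inside a venv:", in_virtualenv())
    print("interpreter:", sys.executable)
```

When the venv is active, the interpreter path should point inside the `chatwithpdf` folder.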

SelfHosting a PDF Chat Bot

As always, we are going to use containers to simplify the deployment process.

Really, Just Get Docker 🐋👇

You can install Docker for any PC, Mac, or Linux at home or in any cloud provider that you wish. It will just take a few moments. If you are on Linux, just:

sudo apt-get update && sudo apt-get upgrade && curl -fsSL https://get.docker.com -o get-docker.sh

sh get-docker.sh

#sudo apt install docker-compose -y

Also install Docker Compose with:

sudo apt install docker-compose -y

When the process finishes, you can use it to self-host other services as well. You should see the versions with:

docker --version

docker-compose --version

#sudo systemctl status docker #and the status

Now, these are the things you need:

- The Dockerfile

- The requirements.txt file

- The App (Python+Streamlit, of course)

- The Docker image, ready with the app bundled, and the Docker configuration

The Dockerfile

FROM python:3.11-slim

# Copy local code to the container image.

ENV APP_HOME /app

WORKDIR $APP_HOME

COPY . ./

RUN apt-get update && apt-get install -y \
    build-essential \
    curl \
    software-properties-common \
    git \
    && rm -rf /var/lib/apt/lists/*

# Install production dependencies.

RUN pip install -r requirements.txt

EXPOSE 8501

We will need the libraries for:

- OpenAI API

- LangChain for the RAG

- FAISS as vector store.

The Packages required for Python

streamlit==1.28.0

pypdf2==3.0.1

langchain==0.0.325

python-dotenv==1.0.0

faiss-cpu==1.7.4

openai==0.28.1

tiktoken==0.5.1
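Since every package above is pinned to an exact version, it can be handy to confirm that the active environment actually matches those pins. The sketch below parses the list and compares it with what is installed, using only the standard library. The helper names are hypothetical and it only understands plain `name==version` lines.

```python
from importlib import metadata

REQUIREMENTS = """\
streamlit==1.28.0
pypdf2==3.0.1
langchain==0.0.325
python-dotenv==1.0.0
faiss-cpu==1.7.4
openai==0.28.1
tiktoken==0.5.1
"""


def parse_pins(text: str) -> dict:
    """Map package name -> pinned version for every 'name==version' line."""
    pins = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" in line:
            name, version = line.split("==", 1)
            pins[name.strip().lower()] = version.strip()
    return pins


def mismatches(pins: dict) -> dict:
    """Return {package: (pinned, installed_or_None)} for every pin that is not satisfied."""
    report = {}
    for name, pinned in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != pinned:
            report[name] = (pinned, installed)
    return report


if __name__ == "__main__":
    for name, (pinned, installed) in mismatches(parse_pins(REQUIREMENTS)).items():
        print(f"{name}: pinned {pinned}, installed {installed}")
```

An empty report means the environment matches the pinned versions.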

And if you want to build your own image… ⏬

docker build -t chat_multiple_pdf .

#export DOCKER_BUILDKIT=1

#docker build --no-cache -t chat_multiple_pdf .

#docker build --no-cache -t chat_multiple_pdf . > build_log.txt 2>&1

#docker run -p 8501:8501 chat_multiple_pdf:latest

#docker exec -it chat_multiple_pdf /bin/bash

#sudo docker run -it -p 8502:8501 chat_multiple_pdf:latest /bin/bash

You can run this build manually, use GitHub Actions, or you can even combine Gitea and Jenkins to do it for you.

PDF Chat Bot - Docker Compose

With the Docker Compose file below, you will be using the x86 Docker image built by the GitHub Actions CI/CD pipeline:

version: '3'

services:
  streamlit-embeddings-pdfs:
    image: ghcr.io/jalcocert/ask-multiple-pdfs:v1.0 #chat_multiple_pdf / whatever name you built
    container_name: chat_multiple_pdf
    volumes:
      - ai_chat_multiple_pdf:/app
    working_dir: /app # Set the working directory to /app
    command: /bin/sh -c "export OPENAI_API_KEY='your_openai_api_key_here' && export HUGGINGFACE_API_KEY='your_huggingface_api_key_here' && streamlit run app.py"
    #command: tail -f /dev/null
    ports:
      - "8501:8501"

volumes:
  ai_chat_multiple_pdf:
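Once the container is started, you may want to confirm that the app is really answering on the published port (the `8501:8501` mapping above). Here is a small sketch with the standard library. It assumes the Streamlit version in the image exposes a health endpoint at `/_stcore/health`, so treat that path as an assumption if you run a different version; the function names are made up for illustration.

```python
import urllib.error
import urllib.request


def health_url(host: str = "localhost", port: int = 8501) -> str:
    """URL of the (assumed) Streamlit health endpoint for the given host and published port."""
    return f"http://{host}:{port}/_stcore/health"


def is_app_up(host: str = "localhost", port: int = 8501, timeout: float = 3.0) -> bool:
    """True if the health endpoint answers with HTTP 200, False on any connection problem."""
    try:
        with urllib.request.urlopen(health_url(host, port), timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        return False
```

If you mapped a different host port (for example `8502:8501`), pass that port to `is_app_up`.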

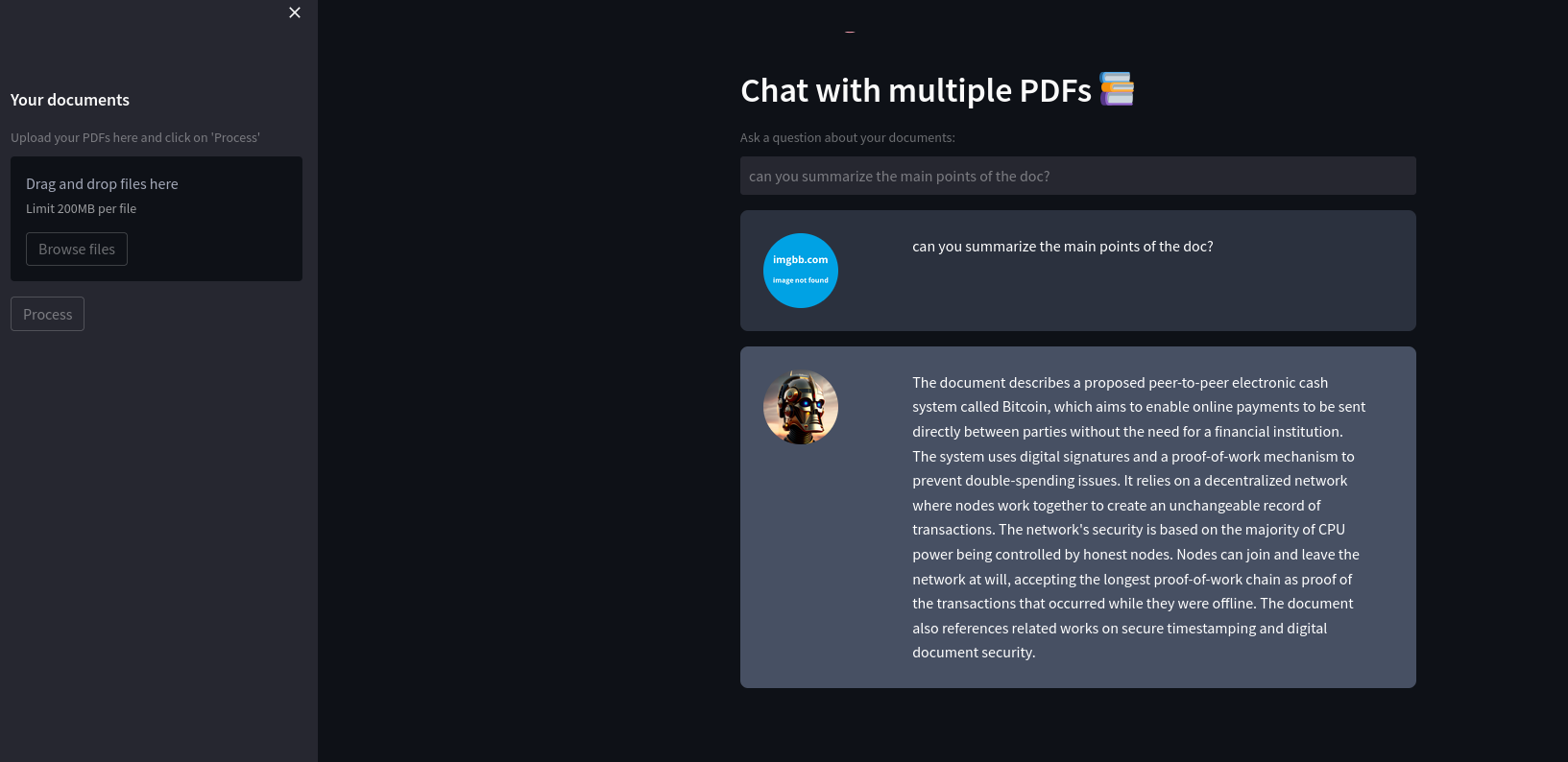

If you followed along, the PDF chat it is available at localhost:8501 and looks like:

FAQ

How to use Github Actions to Build a Streamlit Image

The project includes a GitHub Actions workflow that pushes a new image to the GitHub Container Registry whenever new code is pushed to the main branch.

The Workflow Configuration File 👇

name: CI/CD Pipeline

on:
  push:
    branches:
      - main

jobs:
  build-and-push:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout repository
        uses: actions/checkout@v2

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v1

      - name: Login to GitHub Container Registry
        uses: docker/login-action@v1
        with:
          registry: ghcr.io
          username: ${{ github.actor }}
          password: ${{ secrets.CICD_TOKEN_AskPDF }} #Settings -> Dev Settings -> PAT's -> Tokens +++ Repo Settings -> Secrets & variables -> Actions -> New repo secret

      - name: Build and push Docker image
        uses: docker/build-push-action@v2
        with:
          context: .
          push: true
          tags: ghcr.io/jalcocert/ask-multiple-pdfs:v1.0

Copy it to your project and get the most out of GH Actions

Other FREE AI Tools to Chat with Docs

- PrivateGPT - The PrivateGPT project works with Docker and with local, open models out of the box.

Other FREE Vector Stores

This project uses FAISS as Vector Database, but there are other F/OSS alternatives:

- ChromaDB - https://fossengineer.com/selfhosting-chromadb-docker/

- Vector Admin - https://fossengineer.com/selfhosting-vector-admin-docker/#the-vector-admin-project

RAG Frameworks

RAG is a technique for enhancing the capabilities of large language models (LLMs) by allowing them to access and process information from external sources.

- It follows a three-step approach:

  - Retrieve: Search for relevant information based on the user query using external sources like search engines or knowledge bases.

  - Ask: Process the retrieved information and potentially formulate additional questions based on the context.

  - Generate: Use the retrieved and processed information to guide the LLM in generating a more comprehensive and informative response.
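The steps above can be sketched in a few lines of plain Python. This is a toy illustration only: word counts stand in for real embeddings, a sorted list stands in for a vector store like FAISS, and the final prompt is built but not sent to any LLM. All names here are made up for the example.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercased word counts, used here as a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list, k: int = 2) -> list:
    """Retrieve: return the k chunks most similar to the query."""
    q = tokenize(query)
    return sorted(chunks, key=lambda c: cosine(q, tokenize(c)), reverse=True)[:k]


def build_prompt(query: str, context: list) -> str:
    """Prepare the retrieved text so an LLM can generate an answer grounded in it."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


chunks = [
    "FAISS is a library for similarity search over dense vectors.",
    "Streamlit turns Python scripts into web apps.",
    "Docker packages an application together with its dependencies.",
]
question = "Which library does similarity search over vectors?"
top = retrieve(question, chunks, k=1)
prompt = build_prompt(question, top)  # this string would be sent to the LLM
```

In a real pipeline the only parts that change are the similarity function (embeddings instead of word counts) and the final call that sends `prompt` to a model.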

Some time ago, I covered the EmbedChain RAG framework.

LangChain

This Streamlit project uses LangChain as its RAG framework, with a core focus on the retrieval part of the RAG pipeline:

A Python Library to Build context-aware reasoning applications

Why or Why not LangChain as RAG? ⏬

- Provides a high-level interface for building RAG systems

- Supports various retrieval methods, including vector databases and search engines

- Offers a wide range of pre-built components and utilities for text processing and generation

- Integrates well with popular language models like OpenAI’s GPT series
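One of the text-processing utilities a framework like this takes care of is splitting long documents into overlapping chunks before they are embedded. The sketch below is a plain-Python approximation of that idea, not LangChain's implementation; the function name and the default sizes are assumptions chosen for illustration.

```python
def split_text(text: str, chunk_size: int = 1000, overlap: int = 200) -> list:
    """Split text into chunks of at most chunk_size characters.

    Each chunk shares `overlap` characters with the previous one, so a sentence
    cut at a chunk boundary still appears whole in at least one chunk.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


example = split_text("abcdefghij", chunk_size=4, overlap=2)
```

Larger overlaps make retrieval more forgiving at the cost of storing and embedding more text.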

But it is not the only F/OSS option…

LlamaIndex

Why LlamaIndex as RAG? ⏬

- Designed specifically for building index-based retrieval systems

- Provides a simple and intuitive API for indexing and querying documents

- Supports various indexing techniques, including vector-based and keyword-based methods

- Offers built-in support for common document formats (e.g., PDF, HTML)

- Lightweight and easy to integrate into existing projects

On the downside, it is primarily focused on indexing and retrieval and may lack advanced generation capabilities.
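To make the keyword-based indexing idea concrete, here is a tiny inverted index in plain Python. It is a sketch of the general technique only and does not use or mirror the LlamaIndex API; the file names and helper names are invented for the example.

```python
import re
from collections import defaultdict


def build_index(docs: dict) -> dict:
    """Map each lowercase word to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for word in set(re.findall(r"[a-z0-9]+", text.lower())):
            index[word].add(doc_id)
    return index


def search(index: dict, query: str) -> set:
    """Return the ids of documents that contain every word of the query."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if not words:
        return set()
    result = set(index.get(words[0], set()))
    for word in words[1:]:
        result &= index.get(word, set())
    return result


docs = {
    "a.pdf": "Invoices for the first quarter",
    "b.pdf": "Quarter results and invoices summary",
    "c.pdf": "Holiday schedule",
}
index = build_index(docs)
hits = search(index, "quarter invoices")
```

A vector-based index answers "what is similar to this?", while a keyword index like this one answers "which documents mention these exact words?".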

LangFlow

Langflow is a visual framework for building multi-agent and RAG applications. It’s open-source, Python-powered, fully customizable, model and vector store agnostic.

Why (or Why not) LangFlow as RAG? ⏬

- LangFlow - Pros:

  - Offers a visual programming interface for building RAG pipelines

  - Allows for easy experimentation and prototyping without extensive coding

  - Provides a library of pre-built nodes for various tasks (e.g., retrieval, generation)

  - Supports integration with popular language models and libraries

  - Enables rapid development and iteration of RAG systems

- Cons:

  - May have limitations in terms of customization and fine-grained control compared to code-based approaches

  - Visual interface may not be suitable for complex or large-scale projects

  - Dependency on the LangFlow platform and its ecosystem