The Groq API is offered by Groq, Inc. for its Language Processing Unit (LPU) hardware: AI hardware designed specifically to accelerate natural language processing (NLP) tasks.

The Groq LPU API gives developers a programmatic way to interact with the LPU and leverage its capabilities in their applications.

- The PhiData Project is available on GitHub

- Project Source Code at GitHub

- It is Licensed under the Mozilla Public License v2 ✅


Build AI Assistants with memory, knowledge and tools.

Today, we are going to use the Groq API with F/OSS models to... chat with a YouTube video.

| Information | Details |
|---|---|
| API Key from Groq | You will need an API key from Groq to use the project. |
| Model Control | The models might be open, but you won't have full local control over them: your queries are sent to a third party. ❎ |
| Get API Keys | Get the Groq API Keys |
| LLMs | The LLMs that we will run are open source ✅ |
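To make the "queries go to a third party" point concrete, here is a minimal sketch of what such a query could look like at the HTTP level. It assumes Groq's OpenAI-compatible chat completions endpoint and reuses the `mixtral-8x7b-32768` model name that appears later in this post; the function name `build_chat_request` is made up for illustration. The request is only built, not sent, so the sketch runs without a key or network access.

```python
import json
import urllib.request

# Assumption: Groq's OpenAI-compatible chat completions endpoint.
GROQ_CHAT_URL = "https://api.groq.com/openai/v1/chat/completions"


def build_chat_request(prompt, api_key, model="mixtral-8x7b-32768"):
    """Build (but do not send) an HTTP request for a single-turn chat."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        GROQ_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The API key travels with every request as a bearer token.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request("Why is the sky blue?", "your_api_key_here")
print(request.full_url)
```

Everything in `payload`, including your prompt, leaves your machine when the request is sent, which is the trade-off the table above describes.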

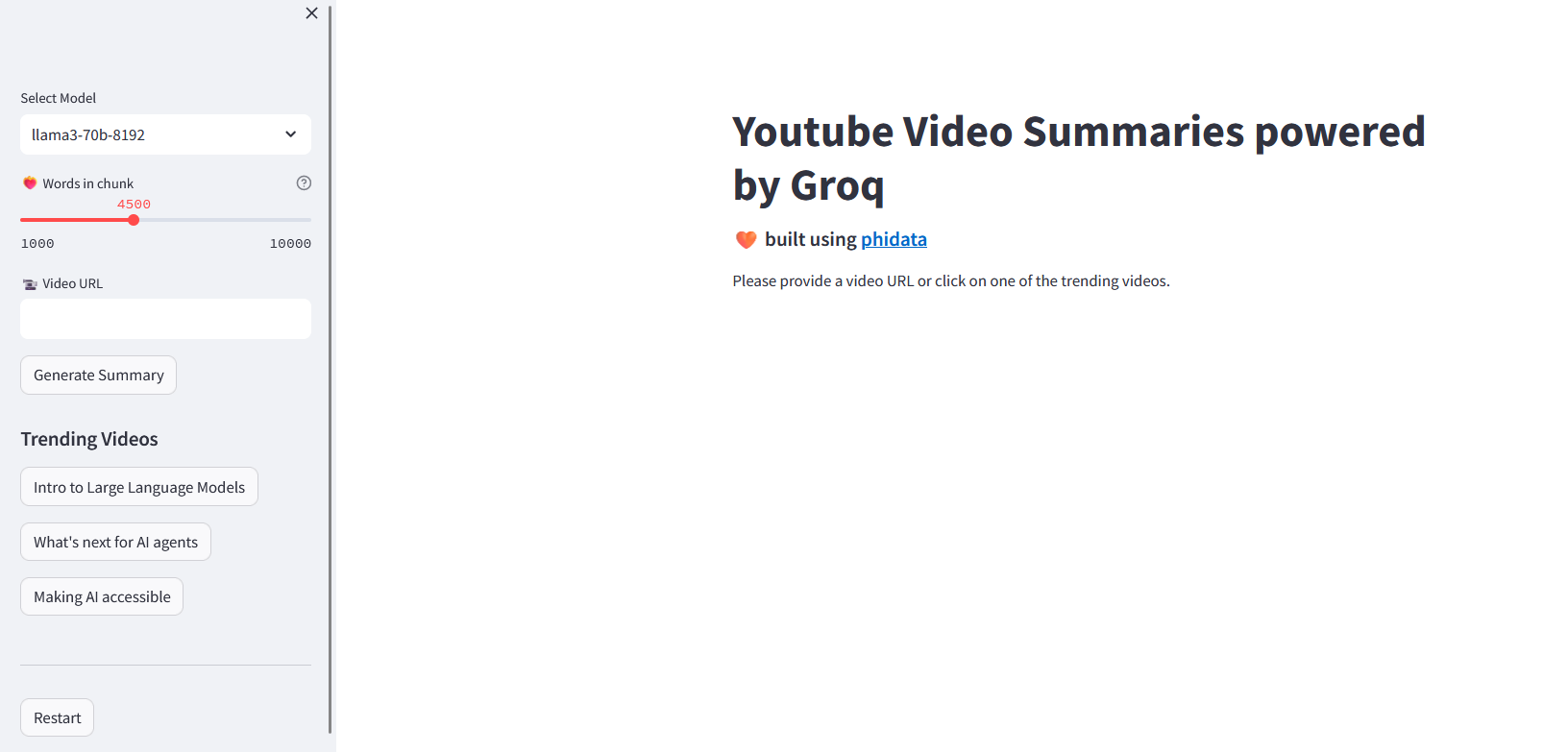

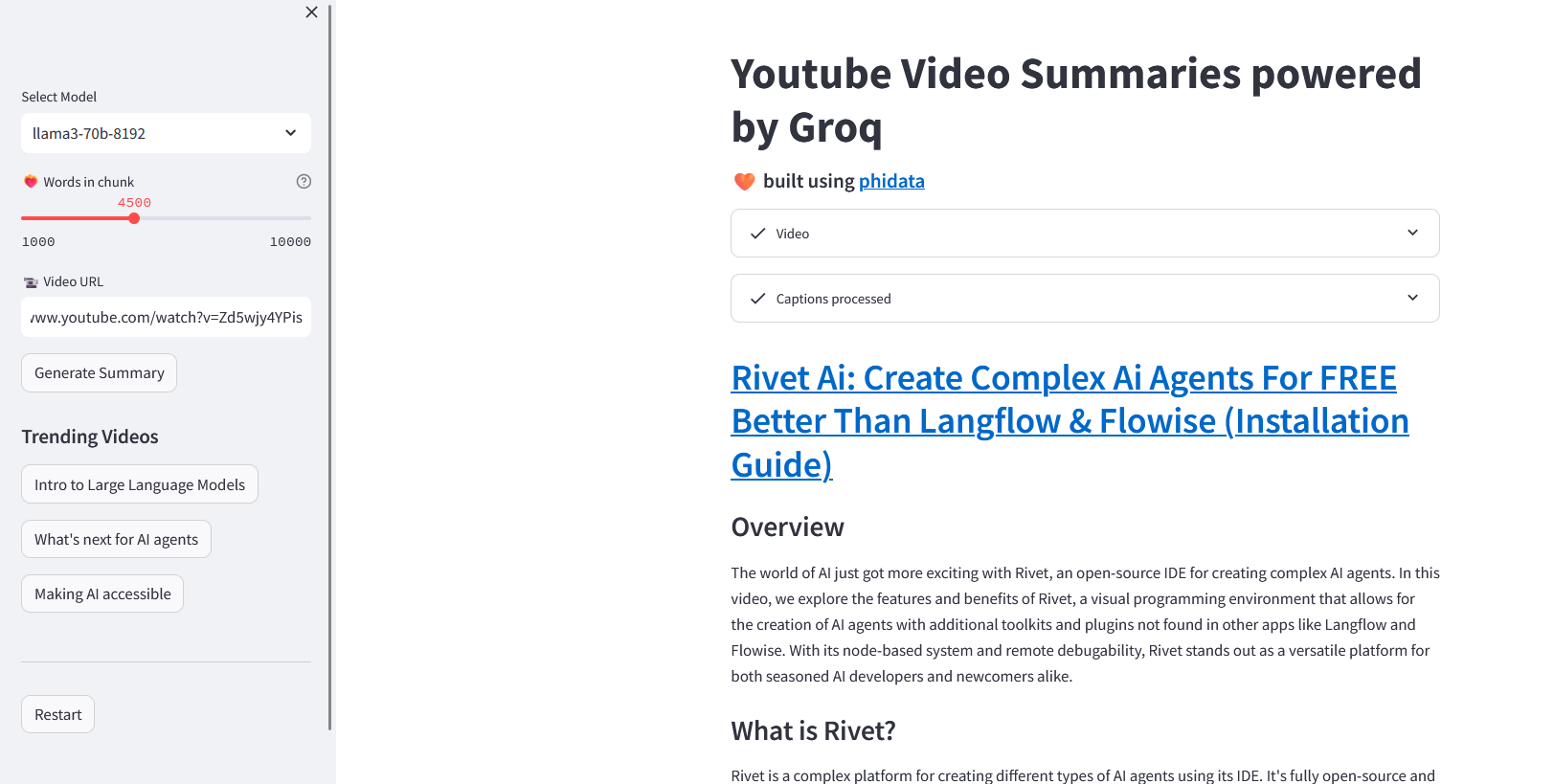

This is what we will get:

And there are many more interesting projects in their repository!

SelfHosting Groq Video Summaries

The phidata repository contains several sample applications like this one, but our focus today will be on: phidata/cookbook/llms/groq/video_summary

It is very simple to get YouTube video summaries with Groq, and we are going to do it systematically with Docker.
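A summarizer like this typically has to turn the pasted link into a video ID before it can look up a transcript. The sketch below shows one way to do that with the standard library; it is an assumption about the general approach, not the phidata app's actual code, and `youtube_video_id` is a hypothetical helper name.

```python
from urllib.parse import parse_qs, urlparse


def youtube_video_id(url):
    """Return the video ID from common YouTube URL shapes, or None."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if host == "youtu.be":
        # Short links carry the ID as the path: https://youtu.be/<id>
        return parsed.path.lstrip("/") or None
    if host in {"youtube.com", "www.youtube.com", "m.youtube.com"}:
        if parsed.path == "/watch":
            # Regular links carry the ID in the "v" query parameter.
            return parse_qs(parsed.query).get("v", [None])[0]
        for prefix in ("/embed/", "/shorts/"):
            if parsed.path.startswith(prefix):
                return parsed.path[len(prefix):].split("/")[0] or None
    return None


print(youtube_video_id("https://www.youtube.com/watch?v=abc123XYZ_-&t=10s"))
```

Returning `None` for anything unrecognized lets the UI show a friendly message instead of failing on a malformed link.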

Really, Just Get Docker 🐋👇

You can install Docker on any PC, Mac, or Linux machine, at home or with any cloud provider you wish. It only takes a few moments. If you are on Linux, just run:

    sudo apt-get update && sudo apt-get upgrade -y
    curl -fsSL https://get.docker.com -o get-docker.sh
    sudo sh get-docker.sh

Also install Docker Compose with:

    sudo apt install docker-compose -y

When the process finishes, you can use it to self-host other services as well. You should see the versions with:

    docker --version
    docker-compose --version
    #sudo systemctl status docker # and the service status

We will use this Dockerfile to capture the app we are interested in from the repository and bundle it:

    FROM python:3.11

    # Install git
    RUN apt-get update && apt-get install -y git

    # Set up the working directory
    #WORKDIR /app

    # Clone the repository, keeping only the video_summary cookbook
    RUN git clone --depth=1 https://github.com/phidatahq/phidata && \
        cd phidata && \
        git sparse-checkout init && \
        git sparse-checkout set cookbook/llms/groq/video_summary && \
        git pull origin main

    WORKDIR /phidata

    # Install Python requirements
    RUN pip install -r /phidata/cookbook/llms/groq/video_summary/requirements.txt

    #RUN sed -i 's/numpy==1\.26\.4/numpy==1.24.4/; s/pandas==2\.2\.2/pandas==2.0.2/' requirements.txt

    # Set the default command to a bash shell
    CMD ["/bin/bash"]

And now we just have to build our Docker Image with the Groq Youtube Summarizer App:

    docker build -t phidata_yt_groq .

    sudo docker-compose up -d
    #docker-compose -f phidata_yt_groq_Docker-compose.yml up -d

    docker exec -it phidata_yt_groq /bin/bash

Now you are inside the built container.

To test the Streamlit app without Compose, start the container directly with docker (or podman) run:

    # docker or podman run; the image name matches the tag used in "docker build" above
    docker run -d --name=phidata_yt_groq -p 8502:8501 -e GROQ_API_KEY=your_api_key_here \
      phidata_yt_groq streamlit run cookbook/llms/groq/video_summary/app.py

    # podman run -d --name=phidata_yt_groq -p 8502:8501 -e GROQ_API_KEY=your_api_key_here \
    #   phidata_yt_groq tail -f /dev/null
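Right after `docker run -d`, the Streamlit server may need a few seconds before it answers. A small polling helper makes that wait explicit. This is a sketch using only the standard library; `wait_for_http` is a hypothetical name, and the URL in the usage note assumes the `8502:8501` mapping from the command above.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url, timeout_s=30.0, interval_s=1.0):
    """Poll url until it answers with HTTP 200 or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval_s) as response:
                if response.status == 200:
                    return True
        except (urllib.error.URLError, OSError):
            pass  # container not ready yet; retry after a short pause
        time.sleep(interval_s)
    return False
```

For the container started above, `wait_for_http("http://localhost:8502")` should return `True` once the app is up, and `False` if it never comes up within the timeout.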

How to use Github Actions to build a multi-arch Container Image ⏬

If you are familiar with GitHub Actions, you will need the following workflow:

    name: CI/CD Build MultiArch

    on:
      push:
        branches:
          - main

    jobs:
      build-and-push:
        runs-on: ubuntu-latest

        steps:
          - name: Checkout repository
            uses: actions/checkout@v2

          - name: Set up QEMU
            uses: docker/setup-qemu-action@v1

          - name: Set up Docker Buildx
            uses: docker/setup-buildx-action@v1

          - name: Login to GitHub Container Registry
            uses: docker/login-action@v1
            with:
              registry: ghcr.io
              username: ${{ github.actor }}
              password: ${{ secrets.CICD_TOKEN_YTGroq }}

          - name: Set lowercase owner name
            run: |
              echo "OWNER_LC=${OWNER,,}" >> $GITHUB_ENV
            env:
              OWNER: '${{ github.repository_owner }}'

          - name: Build and push Docker image
            uses: docker/build-push-action@v2
            with:
              context: .
              push: true
              platforms: linux/amd64,linux/arm64
              tags: |
                ghcr.io/${{ env.OWNER_LC }}/phidata:yt-groq

It uses QEMU together with Docker Buildx to build x86-64 and ARM64 container images for you.
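The "Set lowercase owner name" step exists because container registry repository names must be lowercase, while GitHub owner names may contain capitals. The same normalization can be sketched in Python; `ghcr_image_ref` and the mixed-case owner in the usage line are hypothetical, chosen only to illustrate the step.

```python
def ghcr_image_ref(owner, image, tag):
    """Return a ghcr.io reference with the repository path lower-cased."""
    if not owner or not image or not tag:
        raise ValueError("owner, image and tag must be non-empty")
    # Repository names must be lowercase; the tag keeps its original case.
    return f"ghcr.io/{owner.lower()}/{image.lower()}:{tag}"


# A mixed-case owner is normalized the same way ${OWNER,,} does it in bash.
print(ghcr_image_ref("JAlcocerT", "phidata", "yt-groq"))
```

Without this step, pushing to a tag built from a mixed-case owner name would be rejected as an invalid reference.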

And once you are done, deploy it as a Docker Compose Stack:

    version: '3.8'

    services:
      phidata_service:
        image: phidata_yt_groq #ghcr.io/jalcocert/phidata:yt-groq
        container_name: phidata_yt_groq
        ports:
          - "8501:8501"
        environment:
          - GROQ_API_KEY=your_api_key_here #your_api_key_here 😝
        command: streamlit run cookbook/llms/groq/video_summary/app.py
        #command: tail -f /dev/null # Keep the container running
    #     networks:
    #       - cloudflare_tunnel
    #       - nginx_default

    # networks:
    #   cloudflare_tunnel:
    #     external: true
    #   nginx_default:
    #     external: true
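The Compose file hands the key to the container as the `GROQ_API_KEY` environment variable. A fail-fast check on the Python side catches the most common mistake, which is deploying with the placeholder still in place. This is only a sketch: `require_env` is a hypothetical helper, not part of phidata or Streamlit.

```python
import os

PLACEHOLDER = "your_api_key_here"  # the dummy value used in the Compose file


def require_env(name, environ=None):
    """Return the value of an environment variable or raise a clear error."""
    environ = os.environ if environ is None else environ
    value = environ.get(name, "").strip()
    if not value or value == PLACEHOLDER:
        raise RuntimeError(f"{name} is missing or still set to the placeholder")
    return value
```

Calling `require_env("GROQ_API_KEY")` at startup turns a confusing authentication failure deep inside a request into an immediate, readable error.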

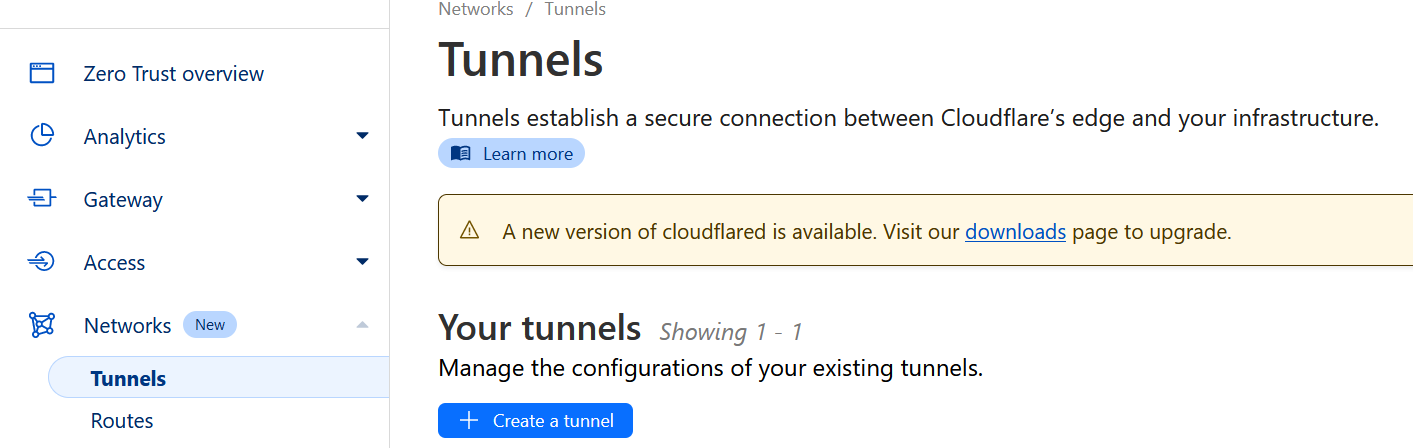

For production deployments, you can use NGINX or Cloudflare Tunnels to get HTTPS.

How to SelfHost the Streamlit AI App with Cloudflare ⏬

- Visit https://one.dash.cloudflare.com
- Hit Tunnels (Network section)
- Create a new Tunnel

And make sure to enter the name of the container and its internal port (for the stack above, phidata_yt_groq and 8501):
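The internal port is the right-hand side of a Compose port mapping, not the published one. That matters when the two differ, as in `8502:8501`. The sketch below derives the address a tunnel on the same Docker network would point at; both function names are hypothetical, and port ranges such as `8000-8010:8000-8010` are deliberately not handled.

```python
def internal_port(mapping):
    """Return the container-side port of a 'HOST:CONTAINER' port mapping."""
    # The container port is always the last colon-separated field;
    # an optional '/tcp' or '/udp' suffix is dropped.
    container_part = mapping.rsplit(":", 1)[-1]
    return int(container_part.split("/", 1)[0])


def tunnel_service_url(container_name, mapping, scheme="http"):
    """Build the URL a tunnel on the same Docker network would target."""
    return f"{scheme}://{container_name}:{internal_port(mapping)}"


print(tunnel_service_url("phidata_yt_groq", "8502:8501"))
```

The tunnel reaches the container by name over the shared Docker network, so the host-side port never enters the picture.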

Conclusion

Now we have our Streamlit UI at: localhost:8501

Feel free to ask for summaries of YouTube videos with Groq:

Similar AI Projects 👇

- Using PrivateGPT to Chat with your Docs Locally ✅

- Using Streamlit + OpenAI API to chat with your PDF Docs

Groq vs Others

| Service | Description |
|---|---|
| Ollama | A free and open-source tool for running LLMs locally, so you host the models yourself. Ideal for users who prefer to keep data on their own servers. |
| Text Generation Web UI | A free and open-source web interface for generating text with local models. Great for content creators and writers needing quick text generation. |
| Mistral | Mistral AI develops open-weight and commercial LLMs and offers them through a hosted API. |
| Grok (xAI, on X/Twitter) | An LLM chatbot developed by xAI and integrated into X (formerly Twitter). Not to be confused with Groq. |
| Gemini (Google) | Google's family of multimodal LLMs, accessible through an API. |
| Vertex AI (Google) | Google's AI service offering tools for data scientists and developers to build, deploy, and scale AI models. |
| AWS Bedrock | Amazon's managed service that provides API access to foundation models from several providers. |
| Anthropic (Claude) | Research-oriented AI company aiming to build models that respect human values. Access their API and manage your keys in their console. |

Adding Simple Streamlit Auth

We can use this simple package: https://pypi.org/project/streamlit-authenticator/

A secure authentication module to validate user credentials in a Streamlit application.

    import streamlit as st
    from Z_Functions import Auth_functions as af

    def main():
        if af.login():
            # Streamlit UI setup
            st.title("Portfolio Dividend Visualization")

    if __name__ == "__main__":
        main()

It references an Auth_functions.py module defined in another file:

Define Auth_functions.py in a separate file with ⏬

    import streamlit as st  # needed for st.write / st.error / st.warning below
    import streamlit_authenticator as stauth

    # Authentication function #https://github.com/naashonomics/pandas_templates/blob/master/login.py
    # Note: this follows the older streamlit-authenticator call style (lists of names,
    # usernames and passwords); newer releases of the package may expect a different setup.
    def login():
        names = ['User Nick 1 🐷', 'User Nick 2']
        usernames = ['User 1', 'User 2']
        passwords = ['SomePassForUser1', 'anotherpassword']

        # Store only hashes, never the plain-text passwords
        hashed_passwords = stauth.Hasher(passwords).generate()

        authenticator = stauth.Authenticate(names, usernames, hashed_passwords,
                                            'some_cookie_name', 'some_signature_key',
                                            cookie_expiry_days=1)

        name, authentication_status, username = authenticator.login('Login', 'main')

        if authentication_status:
            authenticator.logout('Logout', 'main')
            st.write(f'Welcome *{name}*')
            return True
        elif authentication_status is False:
            st.error('Username/password is incorrect')
        elif authentication_status is None:
            st.warning('Please enter your username (🐷) and password (💰)')
        return False
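The reason the login function hashes the passwords before handing them over is that only the hashes should ever be stored or compared. The sketch below illustrates that idea with PBKDF2 from the Python standard library. It is a stand-in technique chosen so the example has no dependencies, not what streamlit-authenticator does internally, and both helper names are hypothetical.

```python
import hashlib
import hmac
import os


def hash_password(password, salt=None, iterations=200_000):
    """Return (salt, digest) for password using PBKDF2-HMAC-SHA256."""
    salt = salt if salt is not None else os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, digest


def verify_password(password, salt, expected_digest, iterations=200_000):
    """Re-derive the digest and compare it in constant time."""
    _, digest = hash_password(password, salt, iterations)
    return hmac.compare_digest(digest, expected_digest)


salt, stored = hash_password("SomePassForUser1")
print(verify_password("SomePassForUser1", salt, stored))
```

The random salt means two users with the same password end up with different stored digests, and `hmac.compare_digest` avoids leaking information through comparison timing.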

FAQ

| What's Trending Now? | Description |
|---|---|
| Toolify | A platform that showcases the latest AI tools and technologies, helping users discover trending AI solutions. |
| Future Tools | A website dedicated to featuring the most cutting-edge and trending tools in the tech and AI industry. |

How to use Groq API Step by Step with Python ⏬

Thanks to TirendazAcademy, we have a step-by-step guide on how to query the Groq API via Python:

- https://github.com/TirendazAcademy/LangChain-Tutorials/blob/main/Groq-Api-Tutorial.ipynb
- We can even use Google Colab!

    !pip install -q -U langchain==0.2.6 langchain_core==0.2.10 langchain_groq==0.1.5 gradio==4.37.1

    # Only needed if you store the key as a Colab secret instead of pasting it below
    from google.colab import userdata

    groq_api_key = 'your_groq_API'

    from langchain_groq import ChatGroq

    # Chat model served by Groq
    chat = ChatGroq(
        api_key = groq_api_key,
        model_name = "mixtral-8x7b-32768"
    )

    from langchain_core.prompts import ChatPromptTemplate

    system = "You are a helpful assistant."
    human = "{text}"

    # {text} is filled in when the chain is invoked
    prompt = ChatPromptTemplate.from_messages(
        [
            ("system", system), ("human", human)
        ]
    )

    from langchain_core.output_parsers import StrOutputParser

    # prompt -> model -> plain string
    chain = prompt | chat | StrOutputParser()

    chain.invoke(
        {"text":"Why is the sky blue?"}
    )
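The `prompt | chat | StrOutputParser()` line reads as a pipeline: each step's output becomes the next step's input. The toy sketch below mimics that chaining with plain Python so the data flow is visible without an API key. It is an illustration of the idea only, not LangChain's implementation, and `Step`, `fake_chat`, and `parser` are hypothetical stand-ins.

```python
class Step:
    """Wrap a function so steps can be chained with the | operator."""

    def __init__(self, func):
        self.func = func

    def __or__(self, other):
        # The combined step runs self first, then feeds the result to other.
        return Step(lambda value: other.invoke(self.invoke(value)))

    def invoke(self, value):
        return self.func(value)


# Stand-ins for the prompt template, the model, and the output parser.
prompt = Step(lambda inputs: f"system: You are a helpful assistant.\nhuman: {inputs['text']}")
fake_chat = Step(lambda text: {"content": f"(model reply to) {text.splitlines()[-1]}"})
parser = Step(lambda message: message["content"])

chain = prompt | fake_chat | parser
result = chain.invoke({"text": "Why is the sky blue?"})
print(result)
```

In the real chain, the middle step is the network call to Groq, and the parser extracts the reply text from the returned message object.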

Ways to Secure a Streamlit App

We have already seen a simple way with the Streamlit Auth Package.

But what if we need something more robust?

How to secure the Access for your AI Apps

- F/OSS apps for application access management:
  - Authentik
  - LogTo:

| Interesting LogTo Resources | Link |
|---|---|
| Protected App Recipe | Documentation |
| Manage Users (Admin Console) Recipe | Documentation |
| Webhooks Recipe | Documentation -> Webhooks URL |

Social sign-in experience with Logto

- Authenticate Users via Email: Easily authenticate users through email.
- Create a Protected App: Add authentication with simplicity and speed.
  - Protected App securely maintains user sessions and proxies your app requests.
  - Powered by Cloudflare Workers, enjoy top-tier performance and 0ms cold start worldwide.
  - Protected App Documentation
- Video Tutorial: Learn how to build your app's authentication in clicks, no code required.

How to install AI easily

Some F/OSS Projects to help us get started with AI:

AI Browser - MIT ❤️ Licensed!

And if you need them, these are some free vector stores for AI projects:

| Project/Tool | Link |
|---|---|
| Vector Admin Project | Self-Hosting Vector Admin with Docker |
| FOSS Vector DBs for AI Projects | Self-Hosting Vector Admin with Docker |
| ChromaDB | Self-Hosting ChromaDB with Docker |